Preview of the new Generative AI for Zendesk Voice

What happens if you give Zendesk AI for Voice one of The IT Crowd's most confusing customer care interactions? Read the article to see the results!

The new integration for Voice is one of the latest in a line of Generative AI releases for Zendesk.

Phone is the oldest channel in a customer care team's tool belt, but it's often also the least efficient. An agent can only take one phone call at a time, which makes it difficult to scale. Historically, there hasn't really been a way to offer decent self-service or deflection, other than losing the customer in a labyrinth of IVR choices. And if a call needs escalation or follow-up, there's no efficient way to know what's already been discussed with the customer.

If an agent wants to escalate a conversation to another team or a team lead, they need to take notes on the conversation, which makes the wrap-up time for a call sometimes as long as the actual conversation itself. And getting the nuance of a conversation written down means the agent either takes notes during the call, which means less attention for the customer, or risks missing elements from the call.

Generative AI for Voice

This is where the new AI features for Voice come in. Once you enable this feature, Zendesk automatically transcribes the entire conversation and adds a summary at the end of the call.

Whenever a call wraps up and needs to be escalated, or if you want to revisit the ticket to check how a call was handled, look for similar issues, or what have you, agents can now read the summary instead of relistening to a minutes-long recording of the call.

Even better, you can now delete the recordings (faster) to comply with local privacy laws, while still retaining the ticket information and conversation in a written way.

Setup

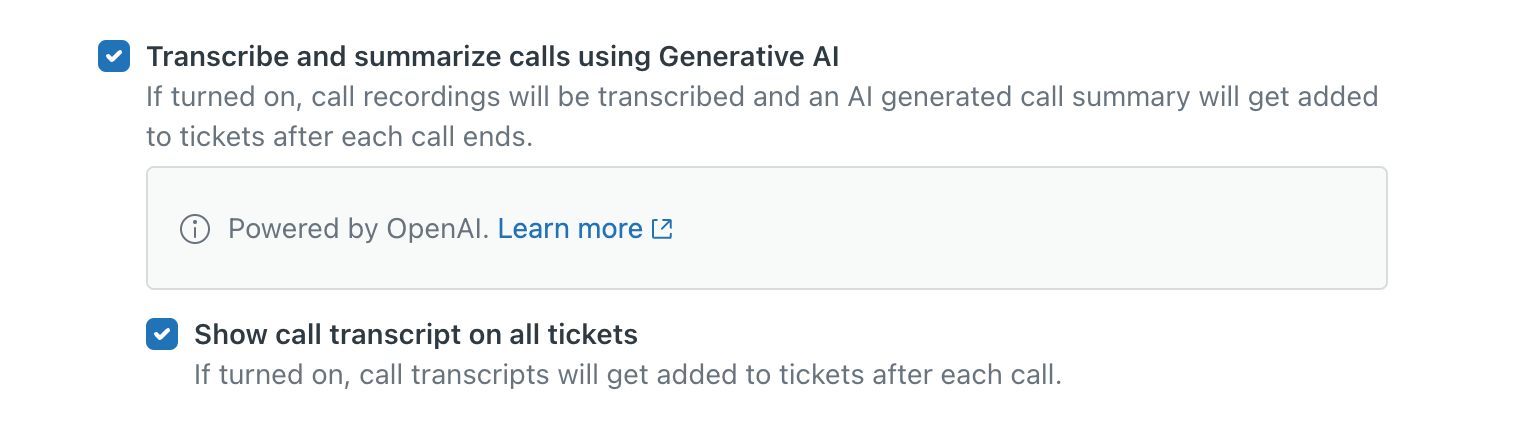

Like most things Zendesk AI, enabling Generative AI for Voice is a matter of checking a box.

The settings are hidden in Admin Panel > Channels > Talk > Settings and consist of two options:

- Enable transcribe and summarise

- Disable transcripts

There are no other settings available, so it's a global on/off for all lines in your instance.

Fine Tuning

For testing, I recommend enabling both the transcript and the summary initially. This way, you can validate the feature (it's currently an EAP so it might have some quirks).

Later, you can disable the full transcripts if you only want the summary and, for efficiency reasons, don't want to store the entire transcript.

Testing

No better way to test a Voice AI feature than to give it the world's most annoying Customer Care interaction!

So, how did Zendesk AI do?

Transcribing the conversation

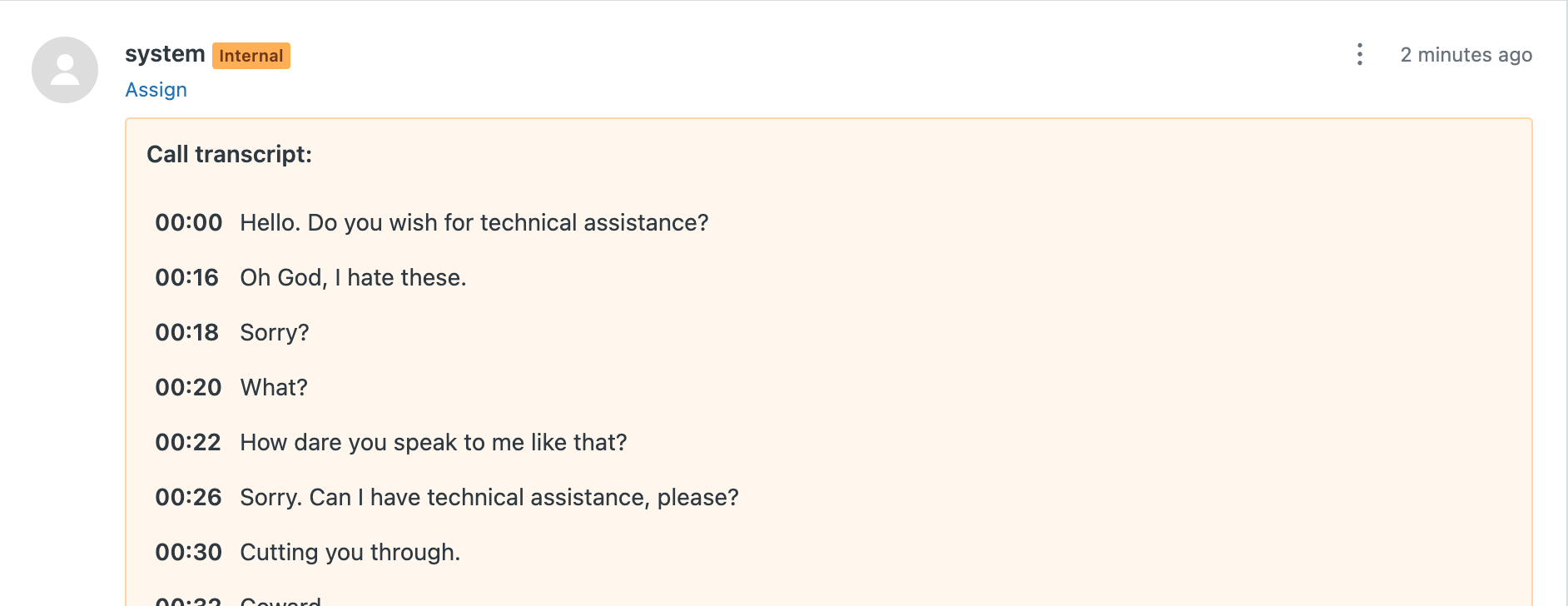

First, let's look at the call transcript:

Customer Transcript

00:00 Hello. Do you wish for technical assistance?

00:16 Oh God, I hate these.

00:18 Sorry?

00:20 What?

00:22 How dare you speak to me like that?

00:26 Sorry. Can I have technical assistance, please?

00:30 Cutting you through.

00:32 Coward.

00:36 Hiya.

00:37 Hello.

00:38 Can I have assistance?

00:41 What?

00:42 Can I have assistance?

00:45 I've got one of your laptops. It won't get past the loading screen.

00:50 Can you put it on again?

00:53 Yes. Look, is there someone else I can talk to?

00:55 There is no less valuable.

00:57 Oh, right.

00:58 With a model device.

01:00 What model do I have?

01:02 Yes.

01:03 It's a Zubion, I think.

01:05 Oh, Zubion, you're too serious.

01:07 Do you not think of me as a Zubion?

01:09 Can you put it on again?

01:11 I didn't get that.

01:13 Can you press the little wheel?

01:17 No, that would be worse somehow.

01:19 Can?

01:21 Can?

01:22 Yes. Can you press?

01:24 Delete?

01:25 Delete. Can I press delete?

01:27 Shh.

01:28 Shh.

01:29 Shh.

01:30 Shh.

01:31 Shh.

01:32 Shh.

01:33 Shh.

01:34 Shh.

01:35 Shh.

01:36 Shh.

01:37 While, while.

01:38 Yes.

01:39 Shh.

01:40 Shh.

01:41 Hello?

01:42 Hello?

As you can see, we get a pretty good transcript of the conversation, but reading this after the call to assist the agent with this ticket is almost impossible due to the nature of phone calls: half sentences, repeated words, and parts of the transcription that (obviously) went wrong.
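To illustrate why a raw transcript is hard to skim, here is a minimal Python sketch that parses timestamped lines like the ones above and collapses consecutive repeats (such as the run of "Shh."). This is not part of the Zendesk feature: the function names `parse_transcript` and `collapse_repeats` are hypothetical, and it assumes every transcript line has the `MM:SS text` shape shown in this article.

```python
import re
from itertools import groupby

# Assumption: each transcript line looks like "01:27 Shh." (MM:SS, then text).
LINE_RE = re.compile(r"^(\d{2}):(\d{2})\s+(.*)$")


def parse_transcript(raw):
    """Return a list of (seconds, text) tuples; blank or unmatched lines are skipped."""
    entries = []
    for line in raw.splitlines():
        match = LINE_RE.match(line.strip())
        if match:
            minutes, seconds, text = match.groups()
            entries.append((int(minutes) * 60 + int(seconds), text))
    return entries


def collapse_repeats(entries):
    """Merge consecutive entries with identical text into (start_seconds, text, count)."""
    collapsed = []
    for text, group in groupby(entries, key=lambda entry: entry[1]):
        group = list(group)
        collapsed.append((group[0][0], text, len(group)))
    return collapsed


# A short excerpt from the transcript in this article.
sample = """01:25 Delete. Can I press delete?
01:27 Shh.
01:28 Shh.
01:29 Shh.
01:37 While, while.
"""

for start, text, count in collapse_repeats(parse_transcript(sample)):
    suffix = f" (x{count})" if count > 1 else ""
    print(f"{start // 60:02d}:{start % 60:02d} {text}{suffix}")
```

Even with repeats collapsed, the half sentences and mis-transcribed words remain, which is why the summary is the more useful artifact for an agent picking up the ticket.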

Summarising the call

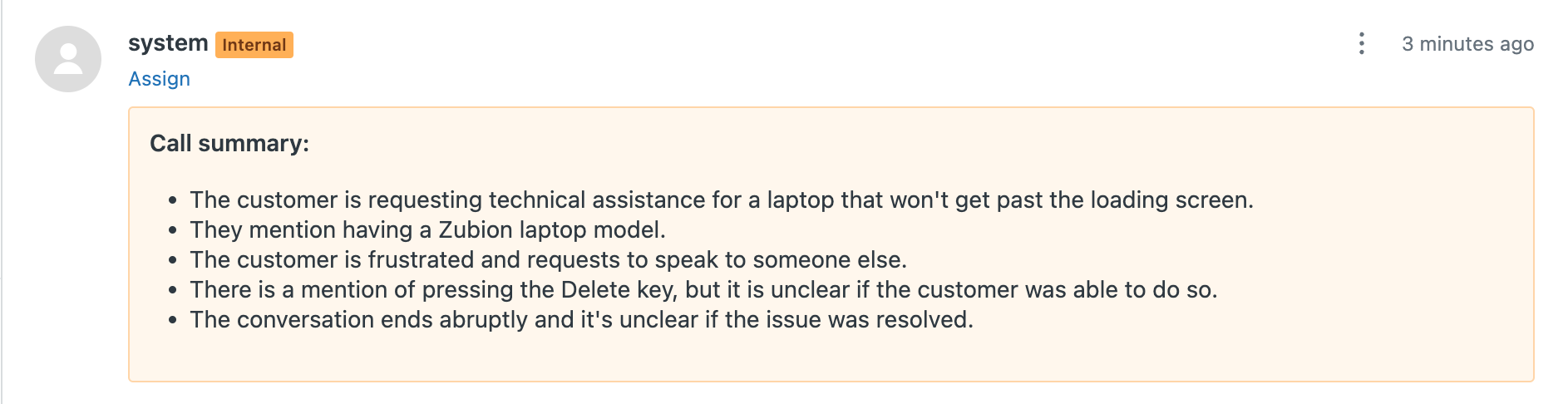

The main feature of this new AI option is a summary of the call. Once my call wrapped up, the transcript above appeared after ~10 seconds, with the summary appearing a few moments later.

And well, you can judge the results yourselves, but I find this pretty accurate!

Call summary

- The customer is requesting technical assistance for a laptop that won't get past the loading screen.

- They mention having a Zubion laptop model.

- The customer is frustrated and requests to speak to someone else.

- There is a mention of pressing the Delete key, but it is unclear if the customer was able to do so.

- The conversation ends abruptly and it's unclear if the issue was resolved.

What's next?

If you use Zendesk Talk and are thinking about buying the Advanced AI add-on, this feature seems like a no-brainer. The amount of time that can be saved this way for agents is pretty obvious, let alone the gains on reporting and insights.

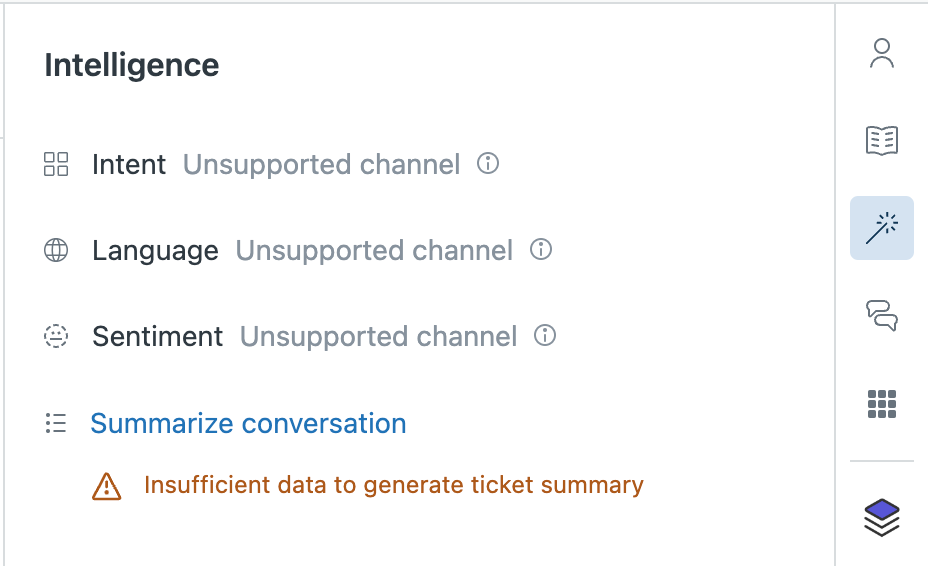

That being said, one major feature is currently missing for me: integration with the other Zendesk AI and Intelligent triage features.

If you have a summary of the call, why doesn't this also feed the Summary option in the Intelligence Panel? And why can't we see the Intent or Sentiment of the call? All the data is obviously there. And if I copy the conversation transcript into a new ticket, I get the results on the right.

But, taking into account that the feature is in an EAP, I'm sure this will all be nicely integrated once the feature work wraps up and the internal data gets hooked up.