Zendesk Agentic AI Agent with adaptive reasoning


At Relate 2025, Zendesk announced their new AI Agents with Adaptive Reasoning. These more powerful variants of their existing AI Agents Advanced (née Ultimate) use a new third-generation model that moves AI Agents away from hand-built flows towards more descriptive procedures. This article gives an overview of what's possible now.

You might ask: third gen? Well, Ultimate originally relied on pre-built conversation flows that guided the customer through a set of questions and actions towards a predefined solution. Showing the right flow to the customer depended on a custom-trained AI model that mapped training phrases to an intent. Variations of "where is my order?", "I didn't get my package yet" and "What's the delivery time" were all used as the basis for a "Delivery Time" intent.
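For readers who never built one, a first-generation intent boiled down to "match the incoming message against example phrases". The sketch below is a deliberately crude stand-in: Ultimate's real intents came from custom-trained models, whereas this scores plain word overlap (Jaccard similarity). The intent names, phrases and the 0.2 threshold are made up for illustration.

```python
import re
from typing import Optional

# Hypothetical intents, each defined only by example (training) phrases.
TRAINING_PHRASES = {
    "delivery_time": [
        "Where is my order?",
        "I didn't get my package yet",
        "What's the delivery time",
    ],
    "refund": [
        "I want my money back",
        "Can I get a refund",
    ],
}


def tokenize(text: str) -> set:
    """Lowercase the text and return its set of words (apostrophes kept)."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a: set, b: set) -> float:
    """Share of words two messages have in common: 0.0 = none, 1.0 = identical."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def classify(message: str, threshold: float = 0.2) -> Optional[str]:
    """Return the intent of the closest training phrase, or None below the threshold."""
    words = tokenize(message)
    best_intent, best_score = None, 0.0
    for intent, phrases in TRAINING_PHRASES.items():
        for phrase in phrases:
            score = jaccard(words, tokenize(phrase))
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent if best_score >= threshold else None
```

In this toy, "When will my package arrive?" lands on `delivery_time` only because it shares "my" and "package" with one training phrase; coverage depends entirely on how many phrasings you list.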

Over time, the hardcoded flows we built became only part of the solution. Thanks to generative AI and LLMs, we could leverage a second generation of AI Agents that used generative replies to pull information from external sources to answer customer inquiries. Called uGPT, or generative replies, this second iteration of AI Agents removed the need for a lot of handwritten flows. Most questions could now be answered with generated answers, and only the more complex use cases that require conditional steps (do you want to refund or exchange the broken product?), external API calls or complex logic still required manually built flows.

In this second iteration of AI Agents Advanced, we also moved away from custom-trained intent models towards zero-training Use Cases. We could simply describe the use case, e.g. "A customer inquires about the expected delivery time of their package.", and the right flow would be triggered.

But even though we no longer needed to train our intent models, the complex flows they trigger were still built by hand, the same way classic chatbots were built ten years ago.

That all changes with these new third-generation AI Agents. With their adaptive reasoning capabilities, we no longer need to build a predefined flow. We can just describe the process, and our AI Agents will follow these instructions towards a resolution. These new agentic capabilities give our AI Agents the freedom to adjust the conversation dynamically based on the customer's input. If a customer already provides all the context in their initial message, the AI Agent will skip the steps that collect it. If the customer has trouble finding an order number, the AI Agent will help them find it, and restart the process as often as needed until the criteria are met. All of this without building a manual flow with predefined steps.

Road to automation

In a previous article I used Zendesk's Agent Copilot and its procedures and actions to create a dynamic flow that would provide customers with streaming options for their favorite movies.

Agent Copilot already has a lot of these agentic capabilities too. We write a procedure, and auto-assist uses these instructions to guide an agent, without every step having to be spelled out as exact actions and responses.

Looking at my previous article, we can see that Agent Copilot provides a lot of benefits for our agents. It looks up information, provides pre-written replies, resolves the ticket, and significantly shortens the resolution time of our requests.

But when we look at this process, there's actually no reason for our human agents to be involved. Every step of this process can happen automatically, without human interaction.

So instead of forwarding these requests to our agents as tickets, we can leverage an AI Agent and fully automate this process. Customers get their answer faster and we reduce our agents' workload, with zero impact on CSAT (it might even go up, because who wants to wait for an agent just to hear where a movie is streaming?).

This habit of converting manual steps into partially automated steps, and then into fully automated flows, is the approach you should take to go from 0 to 80% automation as part of the Road to Automation. Shifting responsibility from human agents to Agent Copilot, and then to AI Agents, is one way to increase your automation rate.

Example flow

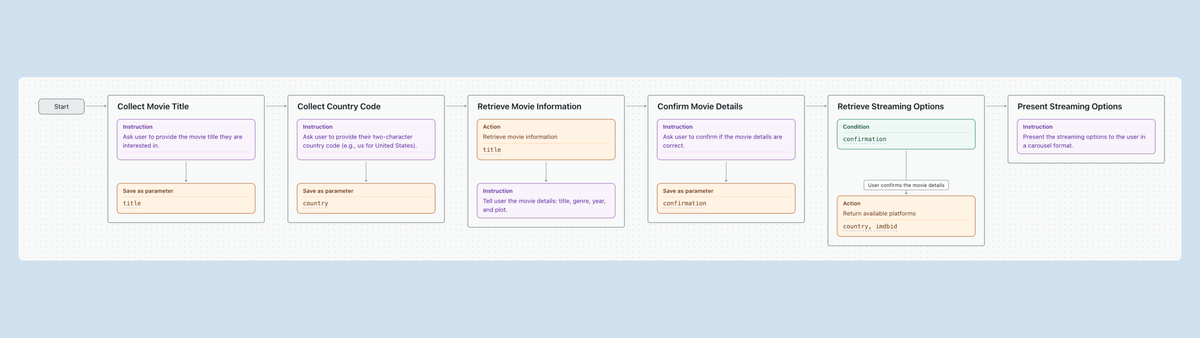

So what would happen if we converted our ticketing flow powered by Agent Copilot into a use case powered by an AI Agent?

- Our AI Agent detects our use case: a customer is interested in streaming options for a movie.

- It asks the customer for a movie title and a two-character country code (with validation).

- We retrieve a potential match for the movie and validate the choice with the customer.

- Once approved, we retrieve a list of streaming options.

- We list the options to the customer.

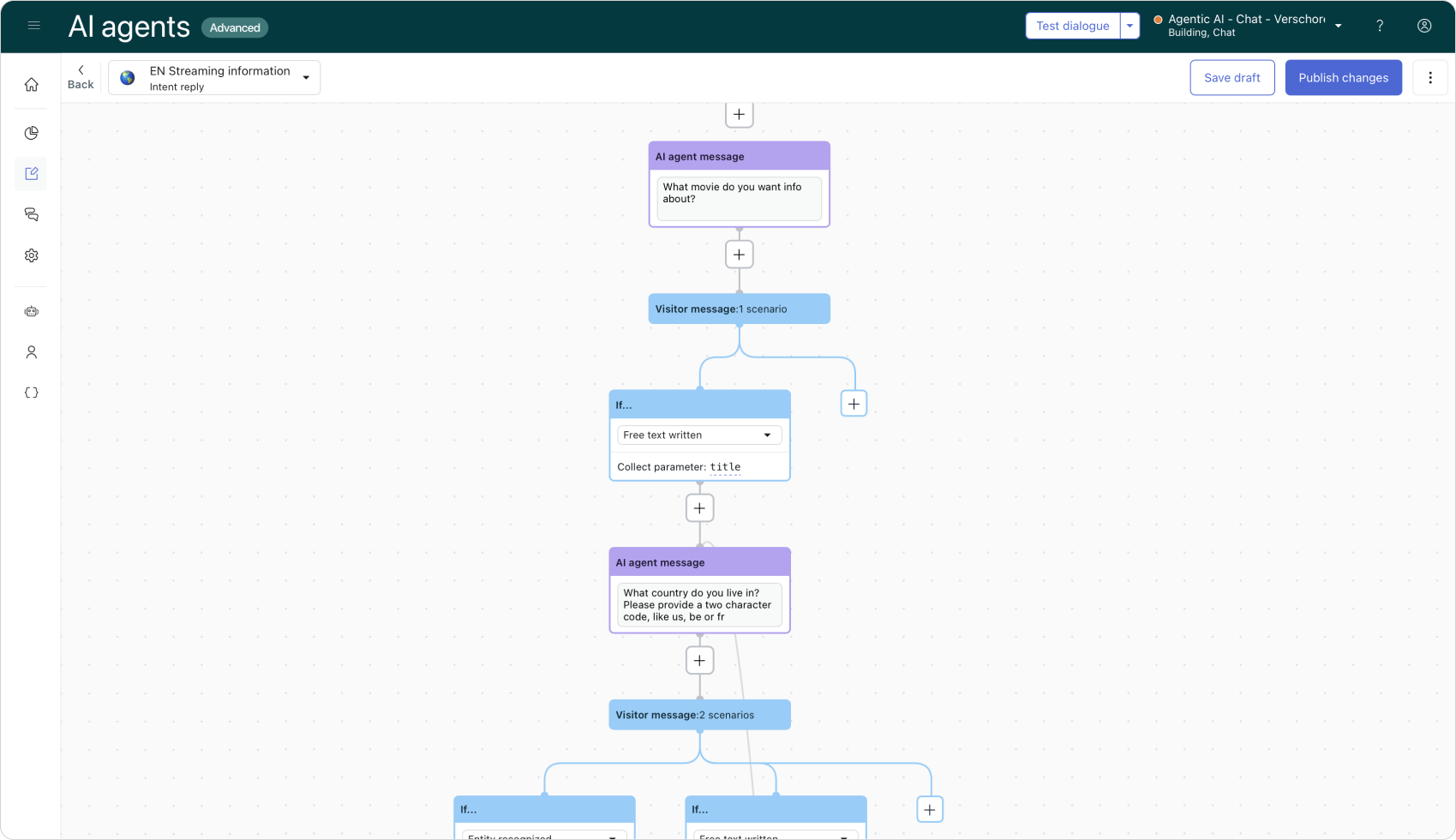

Reply with a dialog flow

This setup might seem like a simple flow, but there are a lot of caveats here. We need to account for invalid movie titles. We need to validate our country code with a regex-based custom entity. We need to account for movies that don't exist. We need to account for zero streaming options. And so on.

At any time, the customer could try to "escape" the conversation and ask about another movie, or jump to another question. Building this out in the classic dialog builder takes a lot of effort and experience. The first step alone, asking for the information, takes 4-8 different building blocks if you account for all failure scenarios.
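To give a feel for how much a hand-built flow has to spell out, here is a sketch of the same use case written as a fixed script in Python. It is not how the dialog builder works internally; it only mirrors its branch-per-scenario structure. The two-letter regex stands in for the custom entity (it checks the shape of the code, not whether the country exists), and `find_movie`/`find_options` are hypothetical callables standing in for the two API integrations.

```python
import re
from typing import Callable, Optional

# Hypothetical "custom entity": exactly two lowercase letters, e.g. "us" or "be".
COUNTRY_CODE = re.compile(r"[a-z]{2}")


def hand_built_flow(answers: list,
                    find_movie: Callable[[str], Optional[dict]],
                    find_options: Callable[[str, str], list]) -> list:
    """Fixed-order script: every failure scenario needs its own explicit branch."""
    replies = []           # what the bot says, in order
    inbox = iter(answers)  # scripted customer messages, in order

    replies.append("Which movie would you like to stream?")
    title = next(inbox)

    replies.append("Which country are you in? Use a two-letter code, e.g. us or be.")
    country = next(inbox).strip().lower()
    while not COUNTRY_CODE.fullmatch(country):          # branch: invalid country code
        replies.append("That doesn't look like a two-letter country code. Try again?")
        country = next(inbox).strip().lower()

    movie = find_movie(title)
    if movie is None:                                   # branch: movie not found
        replies.append(f"Sorry, I couldn't find '{title}'.")
        return replies

    replies.append(f"Did you mean {movie['title']} ({movie['year']})?")
    if next(inbox).strip().lower() not in ("yes", "y"):  # branch: wrong match
        replies.append("Okay, let's start over with another title.")
        return replies

    options = find_options(movie["imdbID"], country)
    if not options:                                     # branch: zero streaming options
        replies.append(f"{movie['title']} isn't streaming in {country.upper()} right now.")
        return replies

    replies.extend(f"{o['platform']} ({o['type']})" for o in options)
    return replies
```

Each `if` or `while` corresponds to one of the scenarios listed above, and the script still can't cope with a customer who switches movies halfway through.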

So, let's see how the new Agentic AI Agent with procedures handles this, shall we?

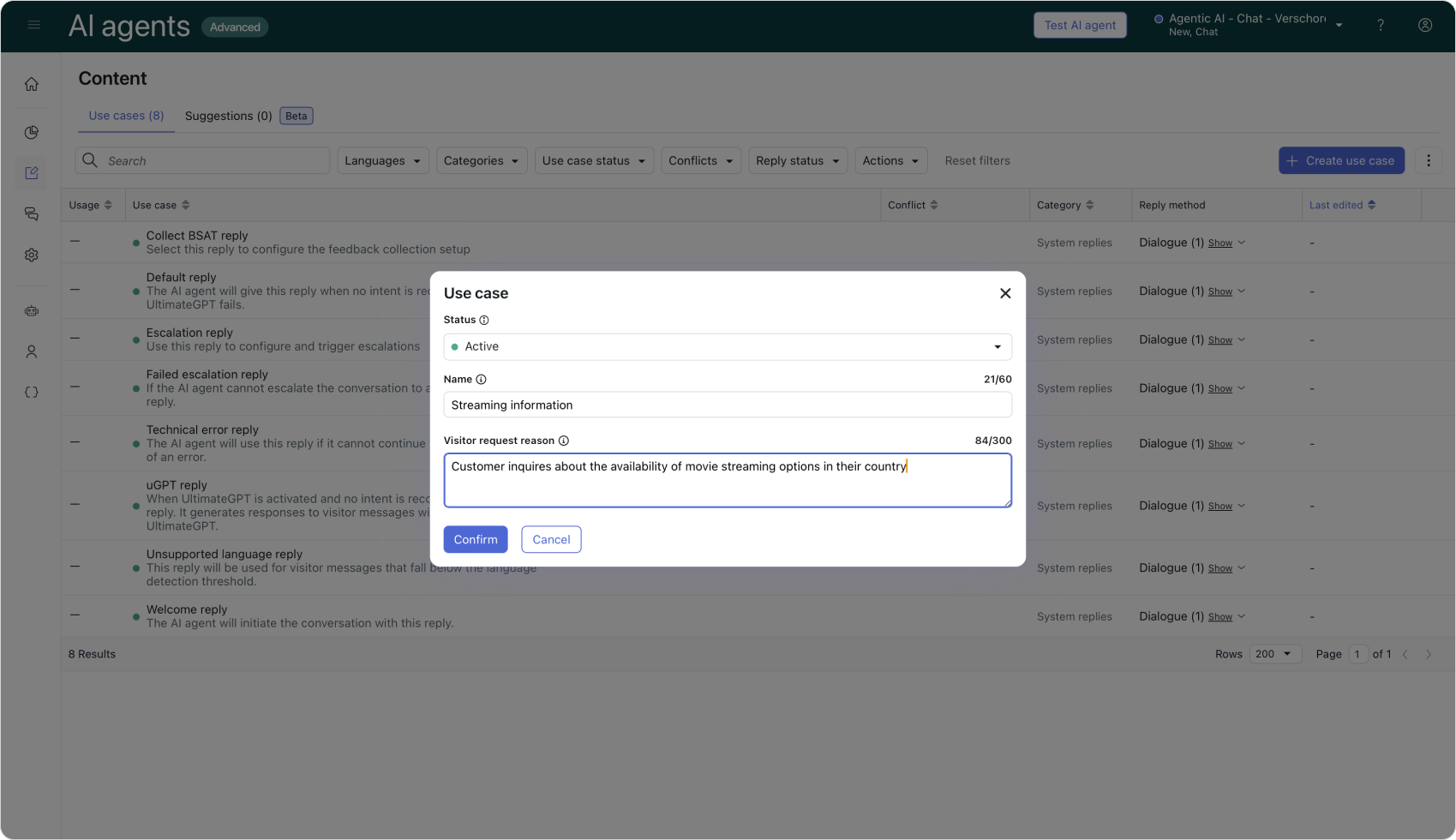

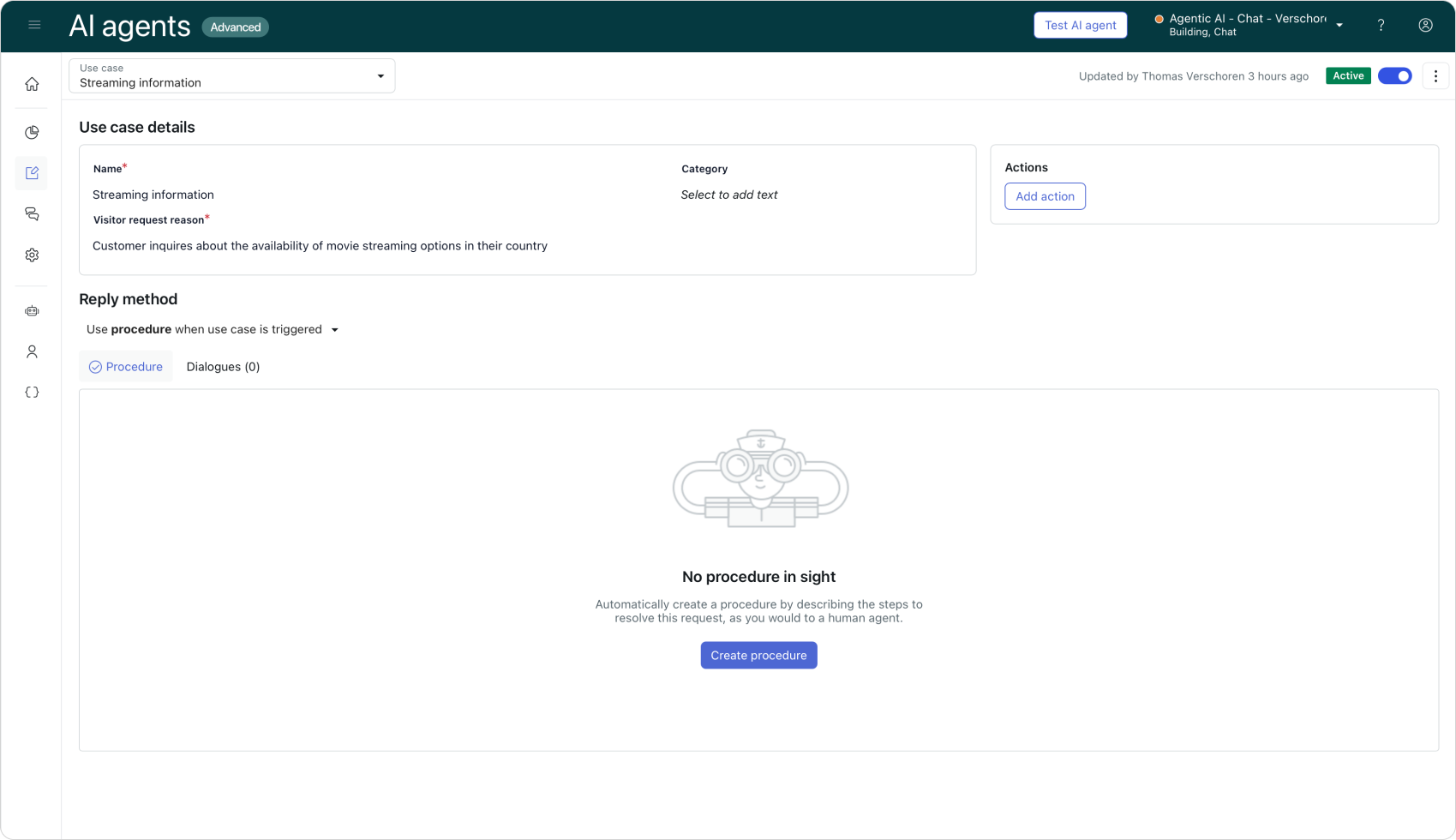

Use Case

We first need to define our use case. We call our use case "Streaming Information" and give it a description of "Customer inquires about the availability of movie streaming options in their country"

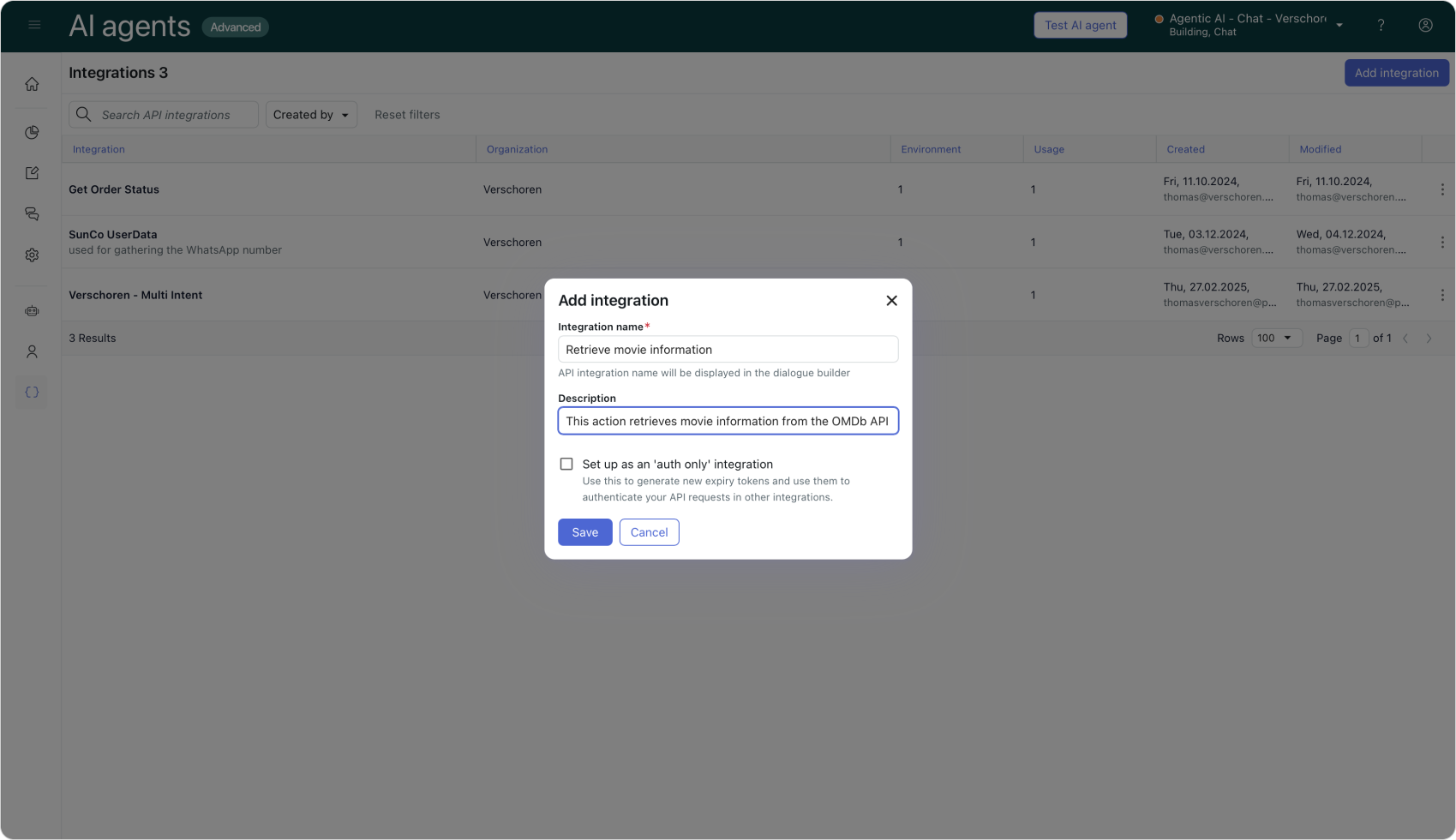

API Integrations

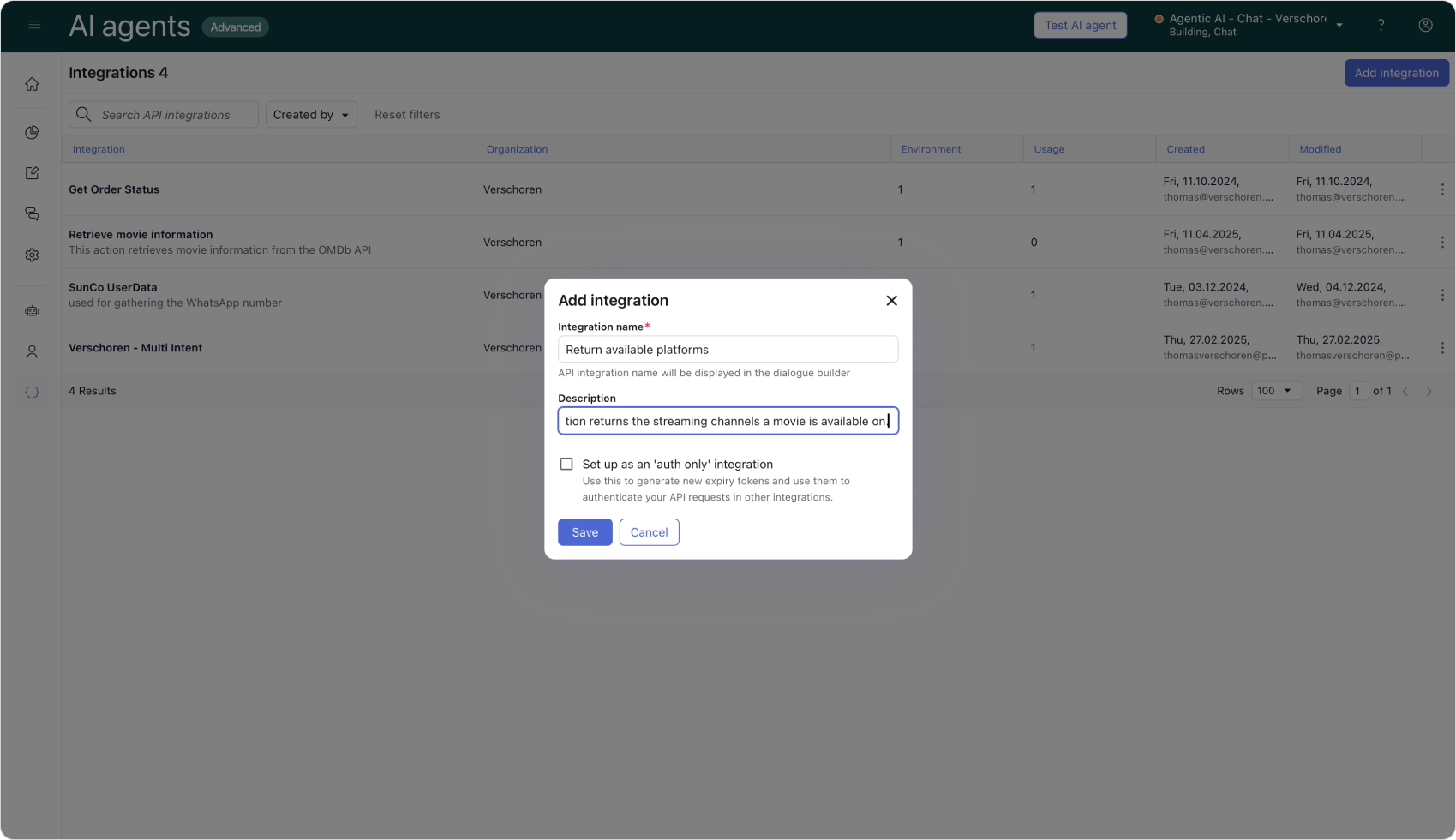

Next up we need to add two API integrations to our AI Agent. These are configured similarly to the previous Agent Copilot example and are used to retrieve movie details, and subsequently to retrieve streaming information for a chosen movie.

We have to set these integrations up separately from the ones we built for Agent Copilot. I understand where this originates from, though: AI Agents run on Ultimate's old platform, whereas Agent Copilot lives on Zendesk proper. Time will surely fix this inconvenience.

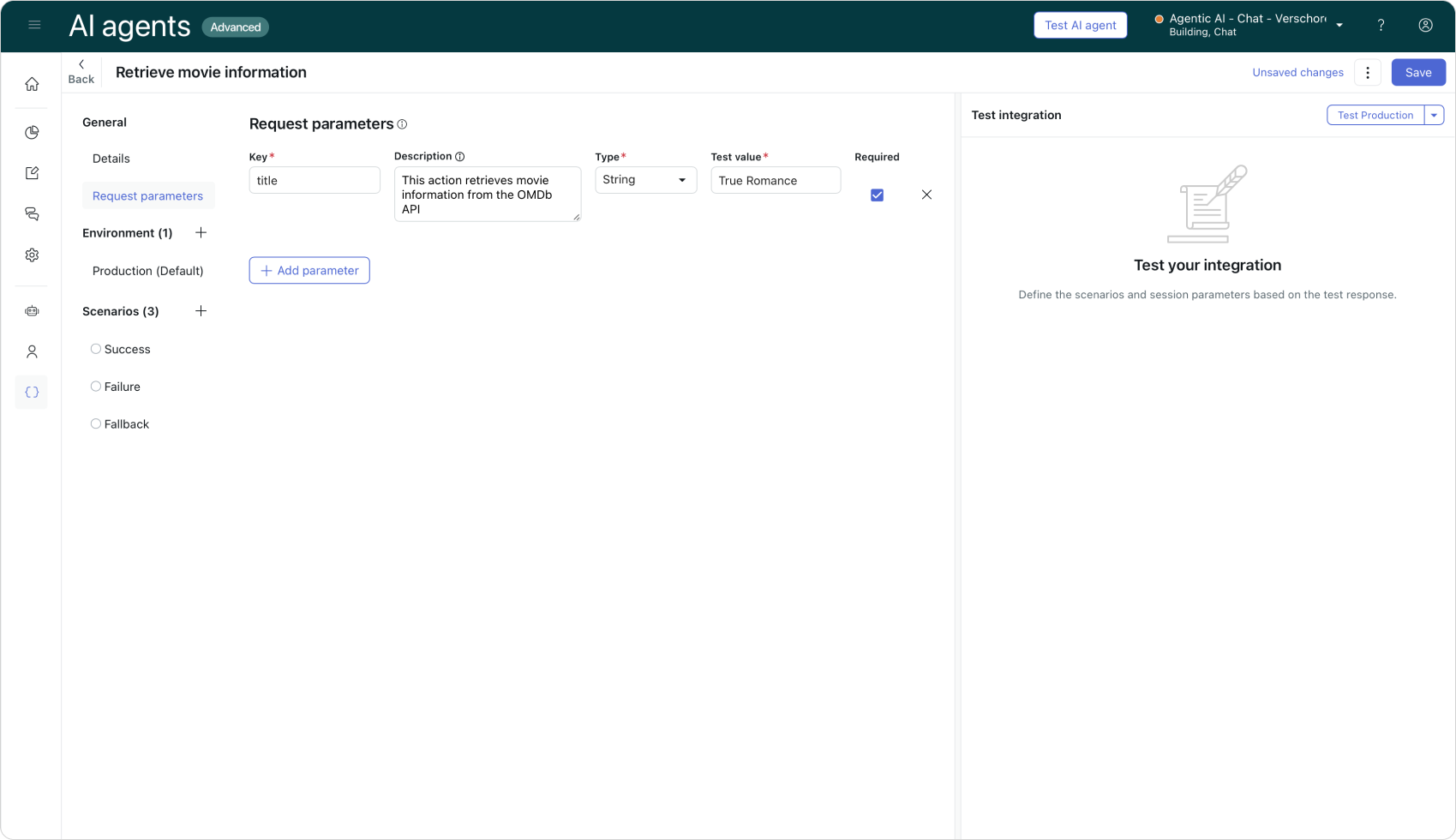

Get movie information

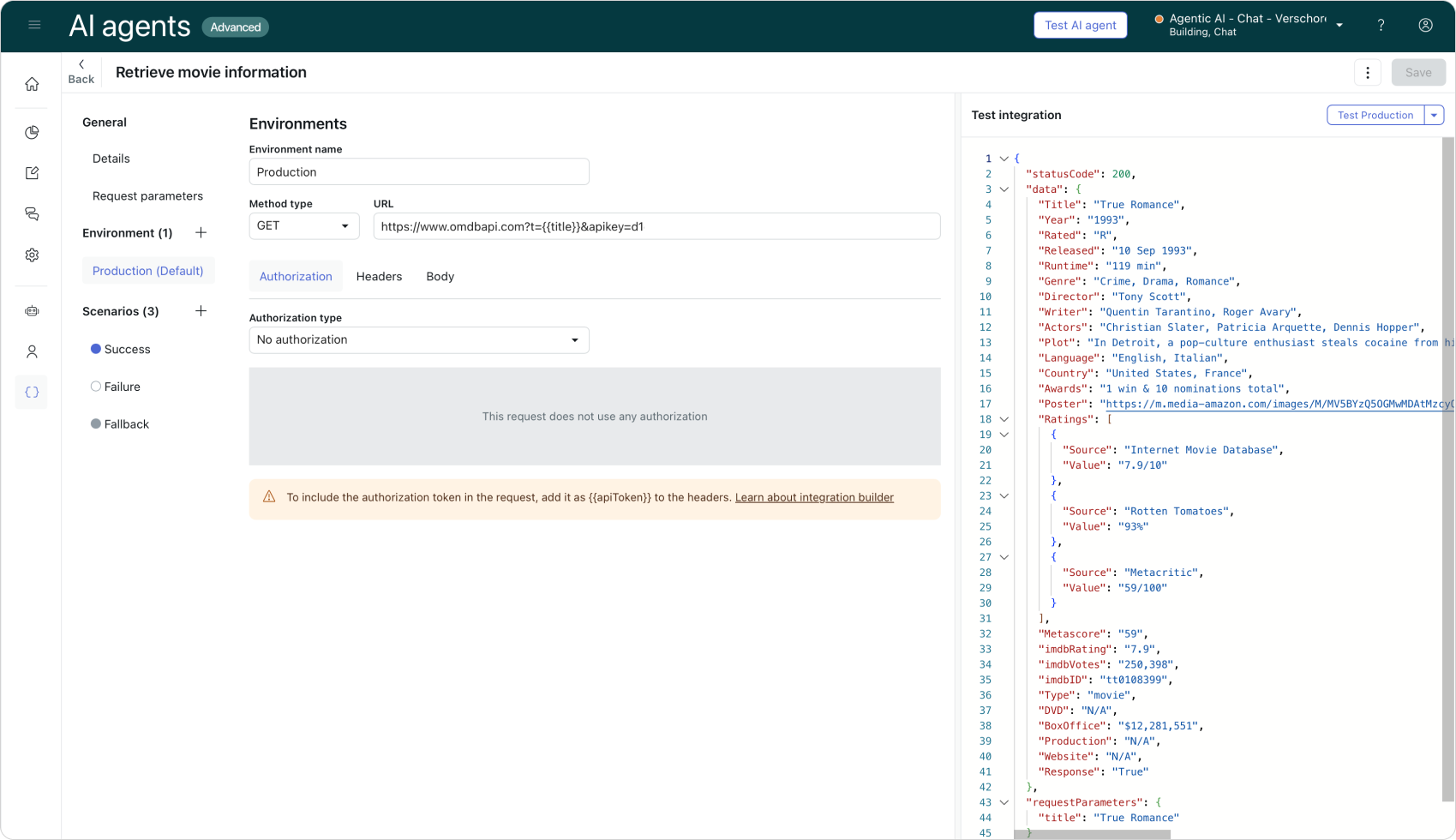

For this first API, we set up a call to the OMDB API to search for a movie that matches our title.

We can define title as an input parameter of type string in the API configurator.

Setting up the OMDB API

We can configure the API call itself in the Production section.

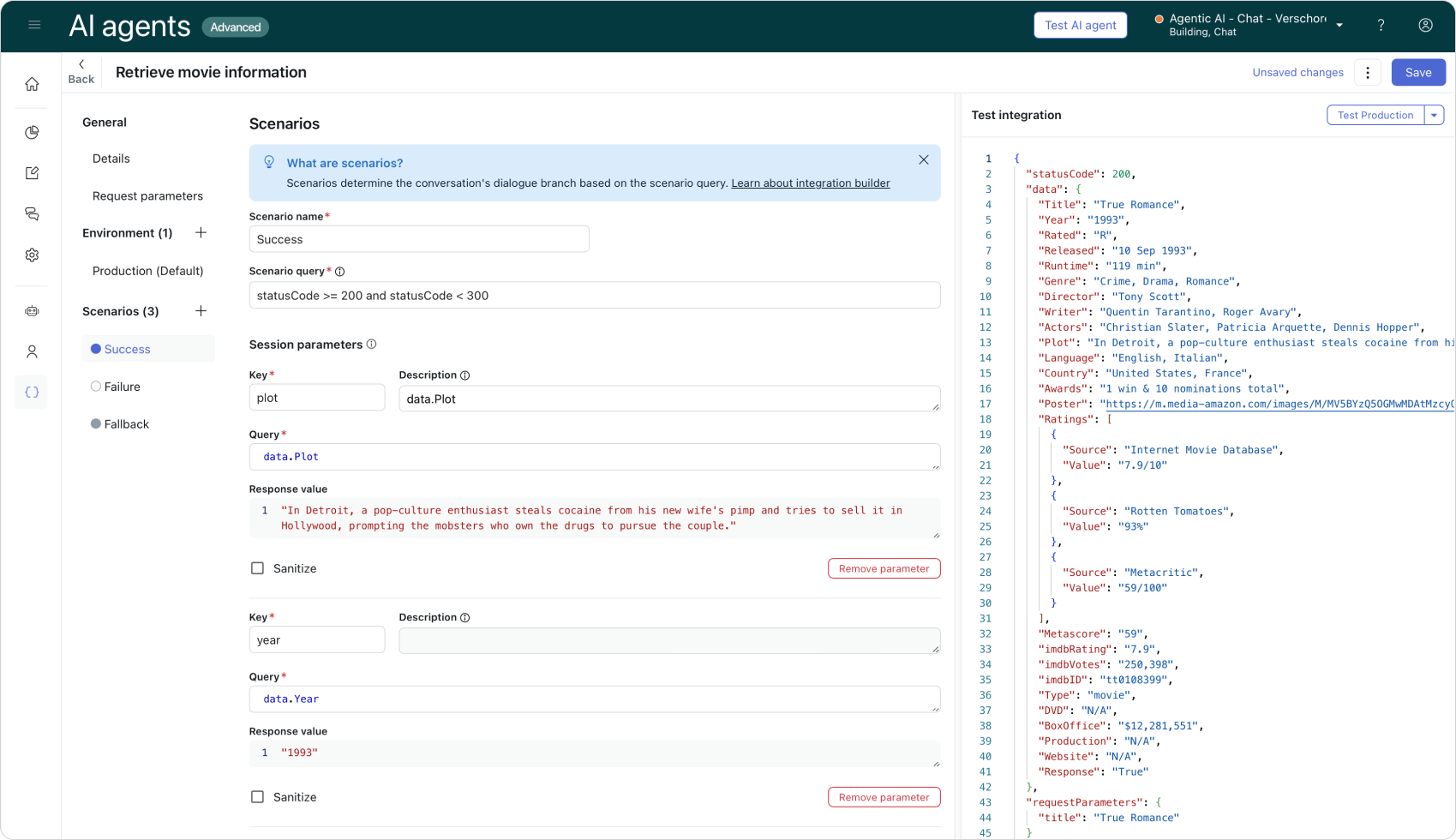

Once it's configured, we can test our API call. If the test succeeds, we can use the Success parameters section of the API configurator to create output variables for our API.

In our scenario, we need the title, plot, year, genre and imdbID values, which live in our response data under the data element.
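The configuration itself happens in Zendesk's UI, but it can help to see the same request and mapping in code. A minimal sketch, assuming OMDb's `apikey` and `t` (title) query parameters and the capitalized field names OMDb returns (`Title`, `Plot`, `Year`, `Genre`, `imdbID`). The `data` wrapper mirrors how the configurator exposes the response body, and `build_omdb_url`/`extract_movie` are hypothetical helper names.

```python
from typing import Optional
from urllib.parse import urlencode

OMDB_BASE_URL = "https://www.omdbapi.com/"


def build_omdb_url(title: str, api_key: str) -> str:
    """Title lookup: OMDb's `t` parameter returns a single best match."""
    query = urlencode({"apikey": api_key, "t": title})
    return f"{OMDB_BASE_URL}?{query}"


def extract_movie(response: dict) -> Optional[dict]:
    """Pick the same five values we map as output variables in the configurator."""
    data = response.get("data", {})      # response body, as the configurator exposes it
    if data.get("Response") != "True":   # OMDb sets Response to "False" when nothing matches
        return None
    return {
        "title": data.get("Title"),
        "plot": data.get("Plot"),
        "year": data.get("Year"),
        "genre": data.get("Genre"),
        "imdbID": data.get("imdbID"),
    }
```

No network call happens here; fetching the URL (for example with `urllib.request.urlopen`) and parsing the JSON body would give you the object to wrap under `data`.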

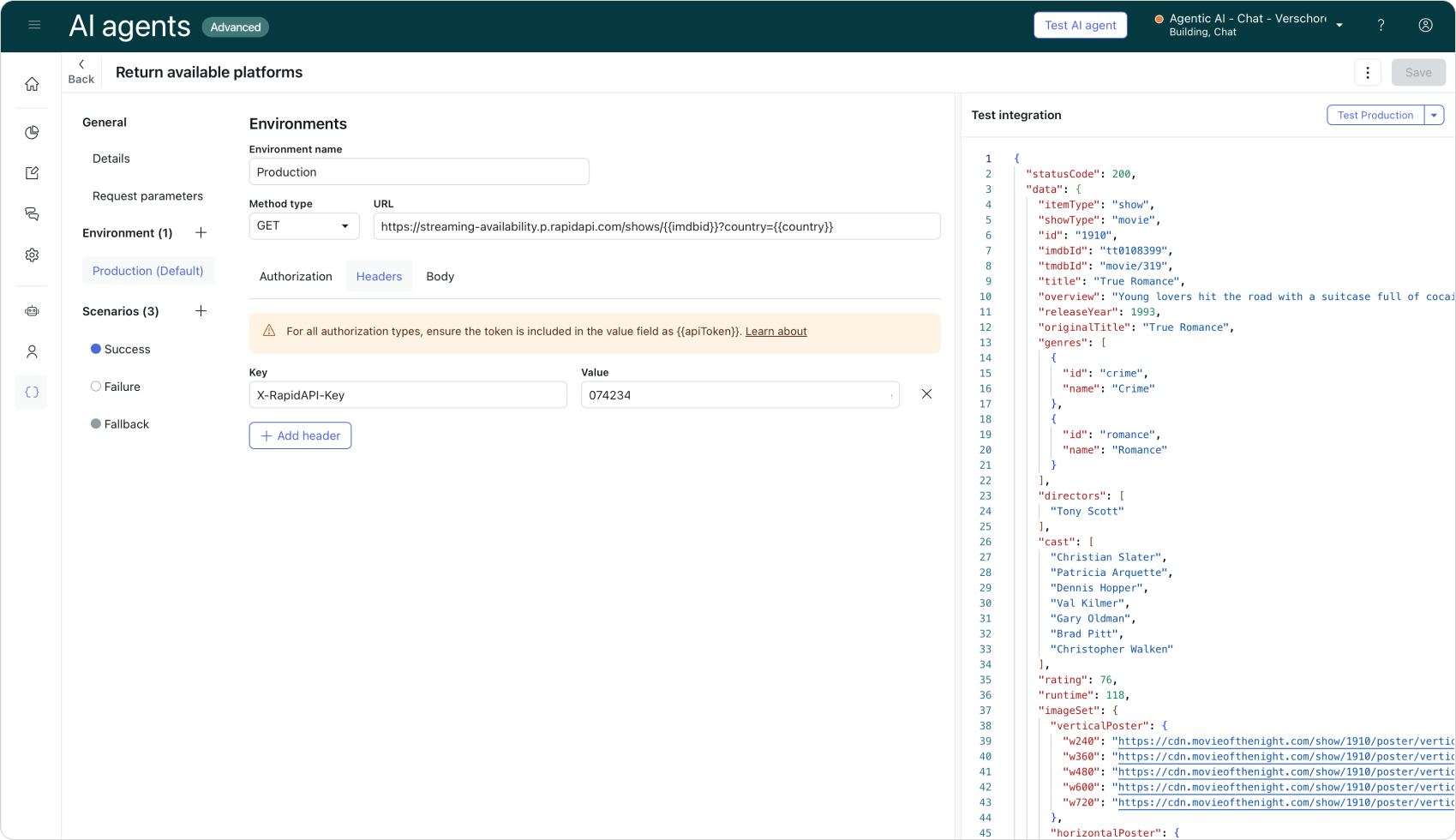

Get streaming options

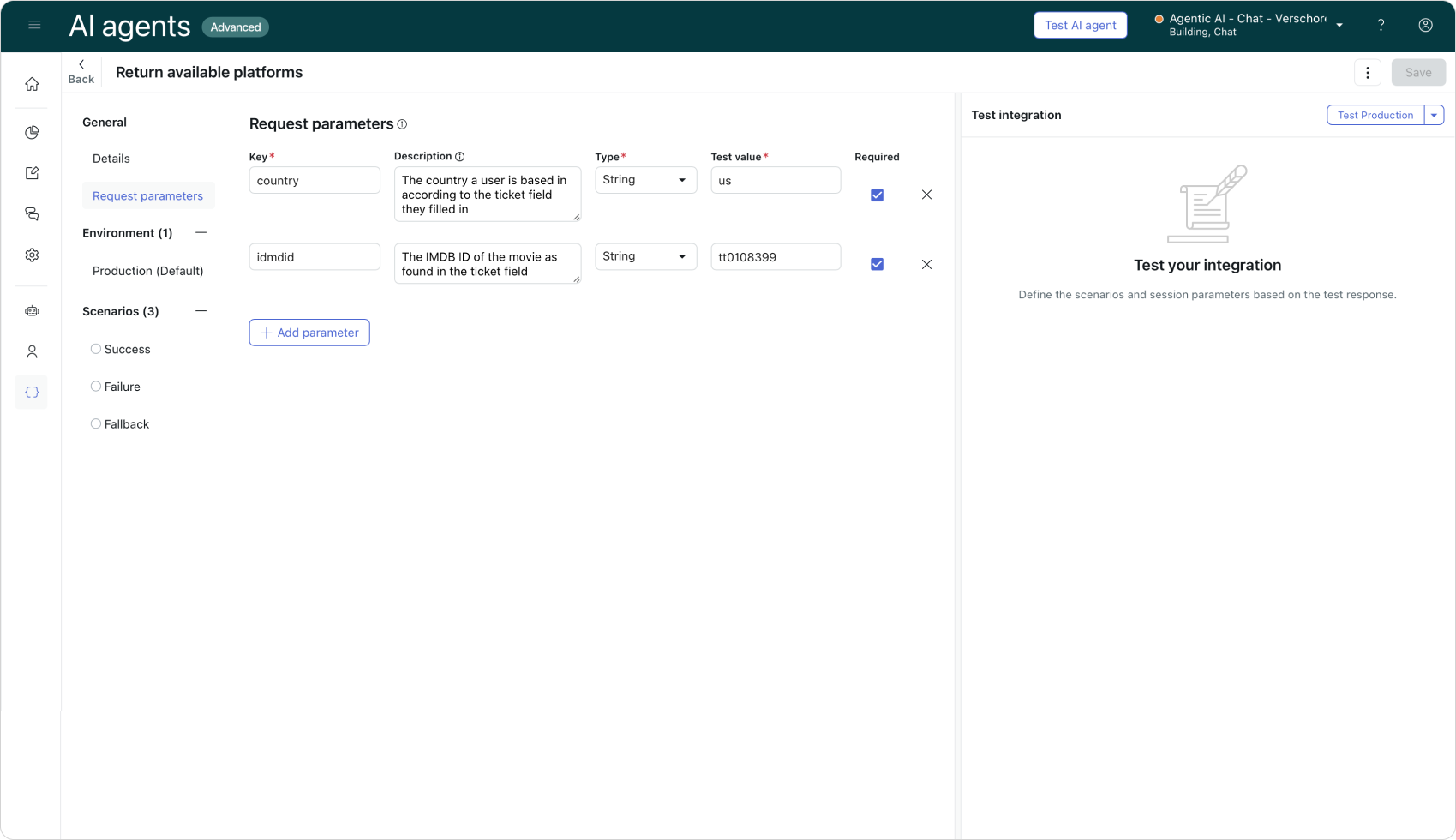

Our second API calls the Streaming Availability API to return streaming options for a given imdbID and country. We can define both of these once again as input parameters for our API.

Our second API call uses the imdbID we set up in the first API call as an input parameter

This second API call once again uses the input parameters as {{variables}} in the URL. Note that we do need to use an authentication header for this one.
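Here is the same request sketched in Python, assuming the RapidAPI-hosted version of the Streaming Availability API, where a show is fetched by ID at `/shows/{id}` with a `country` query parameter and the key is sent in the `X-RapidAPI-Key` header. The host name and header names are assumptions to check against your own subscription; `build_streaming_request` is a hypothetical helper.

```python
from urllib.parse import quote, urlencode
from urllib.request import Request

# Assumption: the RapidAPI-hosted endpoint of the Streaming Availability API.
RAPIDAPI_HOST = "streaming-availability.p.rapidapi.com"


def build_streaming_request(imdb_id: str, country: str, api_key: str) -> Request:
    """Both input parameters go into the URL; the API key goes into a header."""
    url = (
        f"https://{RAPIDAPI_HOST}/shows/{quote(imdb_id)}"
        f"?{urlencode({'country': country.lower()})}"
    )
    return Request(
        url,
        headers={
            "X-RapidAPI-Key": api_key,        # the authentication header
            "X-RapidAPI-Host": RAPIDAPI_HOST,
        },
    )
```

Passing the request to `urllib.request.urlopen` would send it. Because the response groups streaming options by country code, the output path ends in the country, as in `data.streamingOptions.us`.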

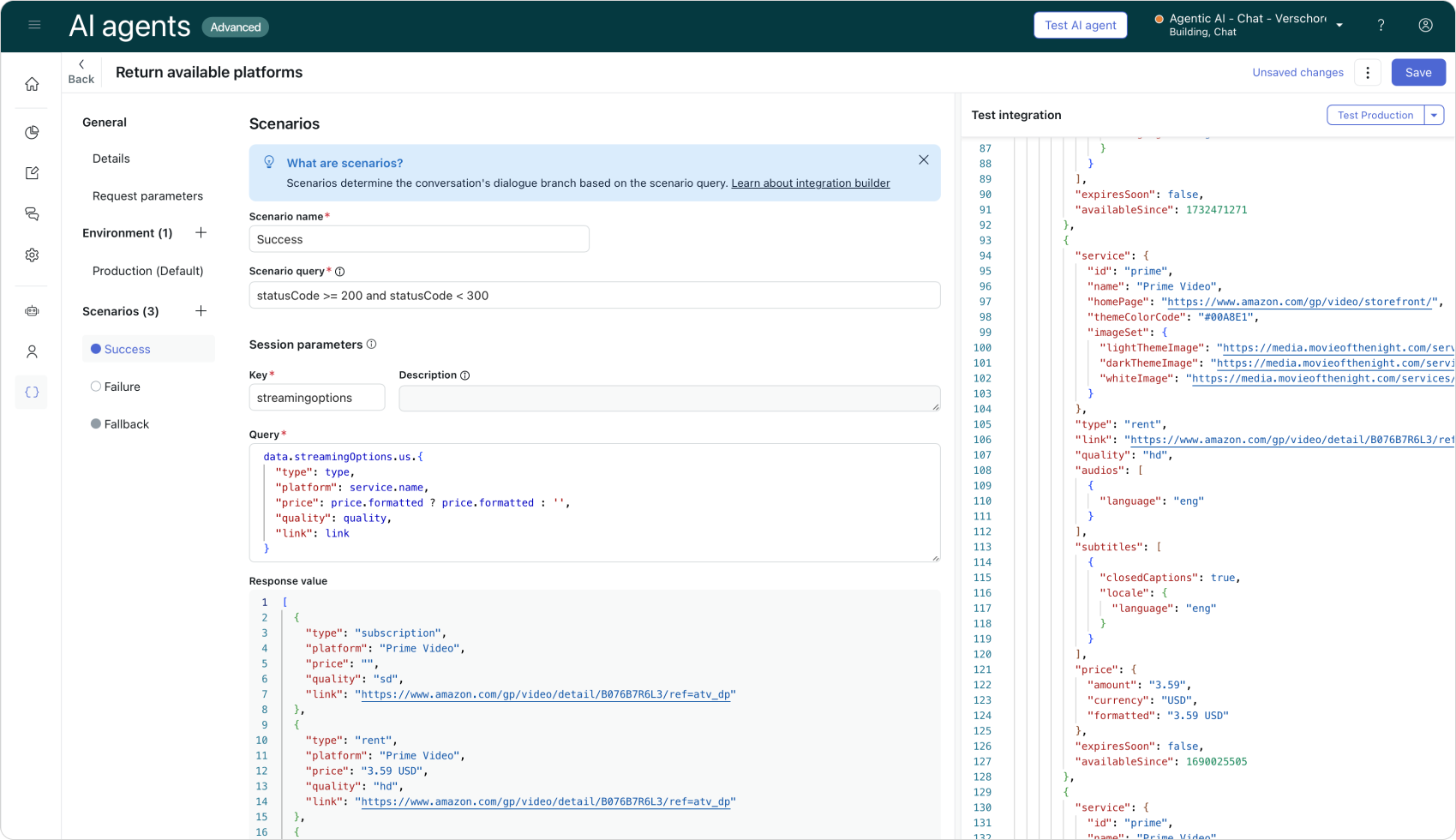

Our output parameters do require some additional work, though. If we look at the raw API output and set our streamingOptions output to data.streamingOptions.us, we get a list of complex JSON objects with nested keys, like this one:

```json
{
  "service": {
    "id": "prime",
    "name": "Prime Video",
    "homePage": "https://www.amazon.com/gp/video/storefront/",
    "themeColorCode": "#00A8E1"
  },
  "type": "subscription",
  "link": "https://www.amazon.com/gp/video/detail/B076B7R6L3/ref=atv_dp",
  "quality": "sd",
  "audios": [
    {
      "language": "eng"
    }
  ],
  "subtitles": [
    {
      "closedCaptions": true,
      "locale": {
        "language": "eng"
      }
    }
  ],
  "expiresSoon": false,
  "availableSince": 1732471271
}
```

Where Agent Copilot could just read this object and render nice output, we need to give our AI Agent a bit of additional help to parse and retrieve the right elements. These new AI Agents are currently in EAP (Early Access Program), so I'll revisit this step in the future to see if newer releases handle this complex object better.

Either way, instead of just storing the raw streamingOptions object, we can use JSONata to parse and convert the original object to a more readable structure:

```jsonata
data.streamingOptions.us.{
  "type": type,
  "platform": service.name,
  "price": price.formatted ? price.formatted : '',
  "quality": quality,
  "link": link,
  "image": service.imageSet.lightThemeImage
}
```

Our JSONata code

Note that the country code is currently hardcoded as us in the JSONata code. If anyone knows how to add a dynamic input variable here, please reach out!

Which results in this cleaner data to use in our flows:

```json
[
  {
    "type": "subscription",
    "platform": "Prime Video",
    "price": "",
    "quality": "sd",
    "link": "https://www.amazon.com/gp/video/detail/B076B7R6L3/ref=atv_dp",
    "image": "https://media.movieofthenight.com/services/prime/logo-light-theme.svg"
  },
  {
    "type": "rent",
    "platform": "Prime Video",
    "price": "3.59 USD",
    "quality": "hd",
    "link": "https://www.amazon.com/gp/video/detail/B076B7R6L3/ref=atv_dp",
    "image": "https://media.movieofthenight.com/services/prime/logo-light-theme.svg"
  }
]
```

The cleaned up and restructured output
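For comparison, here is the same reshaping in Python. It isn't something you paste into Zendesk (the configurator takes JSONata), but it spells out field by field what the JSONata expression does, and shows the hardcoded `us` becoming an ordinary argument once the country is a variable. The `price.formatted` and `service.imageSet.lightThemeImage` paths come from the JSONata expression; `clean_streaming_options` is a hypothetical name.

```python
def clean_streaming_options(response: dict, country: str) -> list:
    """Python equivalent of the JSONata expression, with the country as a parameter."""
    by_country = response.get("data", {}).get("streamingOptions", {})
    cleaned = []
    for option in by_country.get(country, []):
        service = option.get("service", {})
        cleaned.append({
            "type": option.get("type"),
            "platform": service.get("name"),
            # Like `price.formatted ? price.formatted : ''`: no price means "".
            "price": (option.get("price") or {}).get("formatted") or "",
            "quality": option.get("quality"),
            "link": option.get("link"),
            "image": service.get("imageSet", {}).get("lightThemeImage"),
        })
    return cleaned
```

A country with no entries simply yields an empty list, which is the "zero streaming options" case the procedure has to handle.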

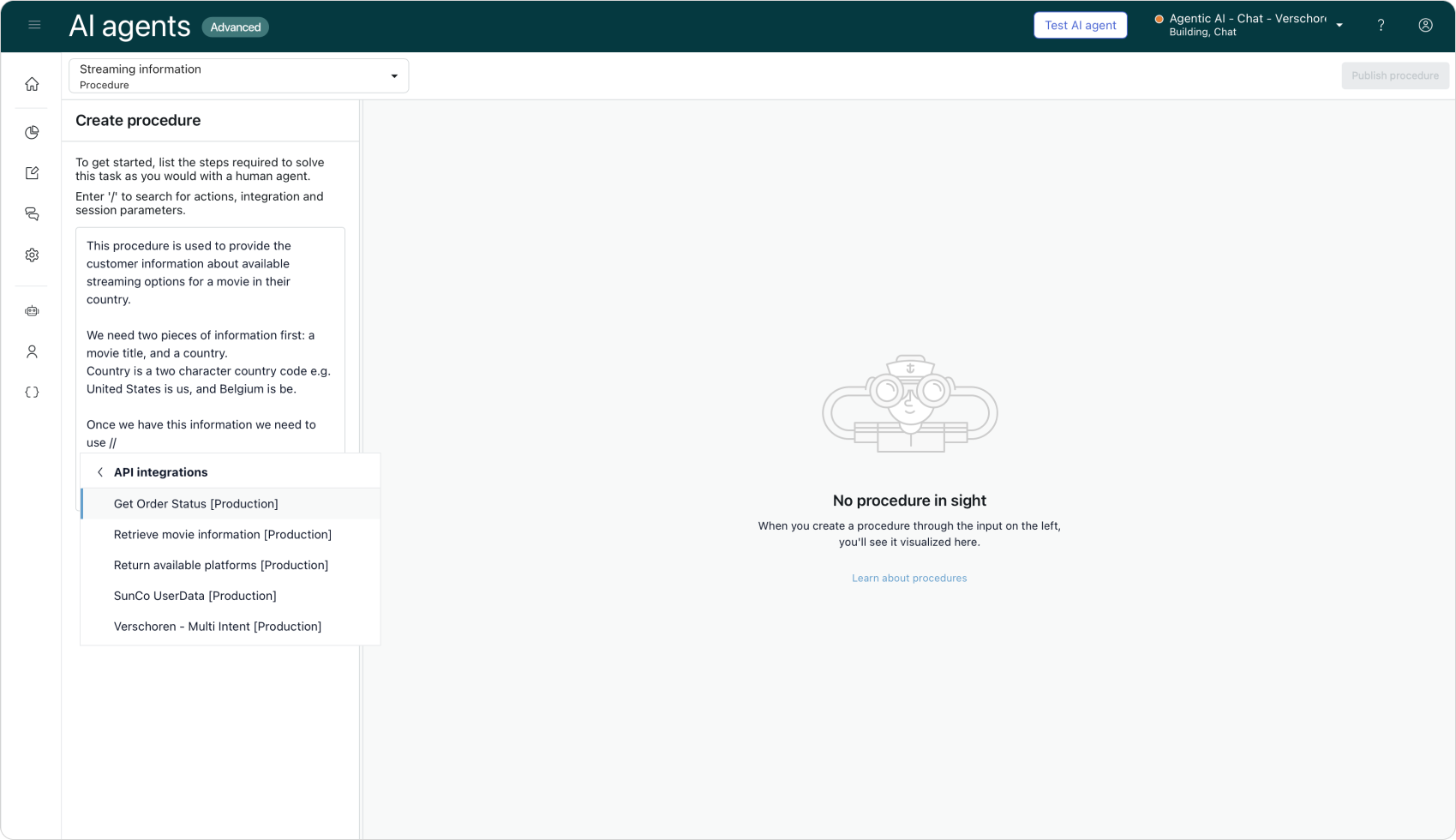

Procedure

Before the new Adaptive Reasoning release, the next step would be building out a complex dialog flow to guide the customer through the different steps of our use case.

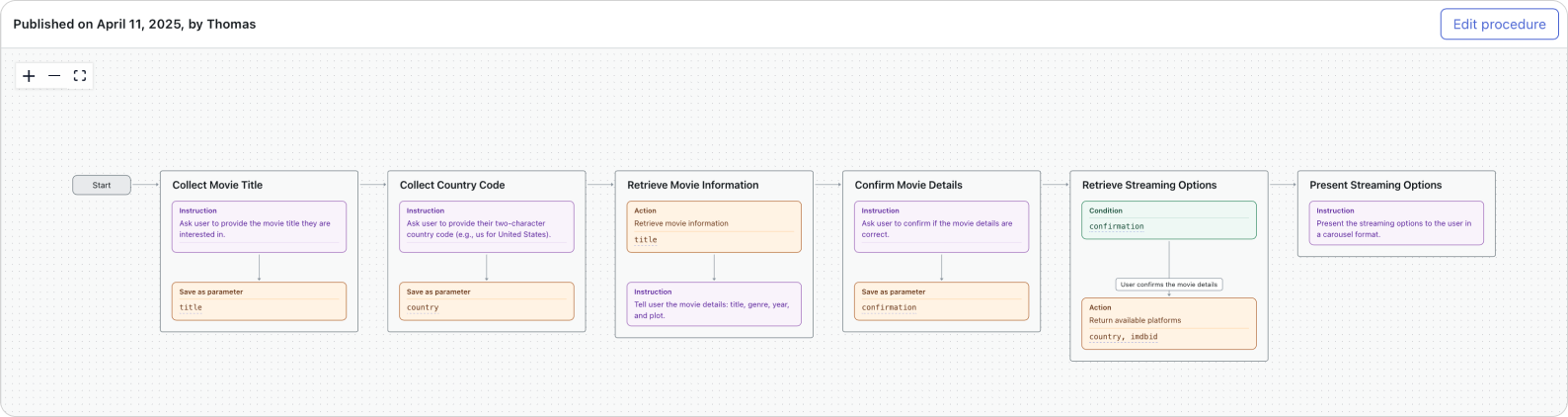

But thanks to this new release, we can replace all that work with a written procedure instead. Our written procedure looks like this:

This procedure is used to provide the customer information about available streaming options for a movie in their country.

We need two pieces of information first: a movie title, and a country.

Country is a two character country code e.g. United States is us, and Belgium is be.

Once we have this information we need to use {{Retrieve movie information [Production]}} to retrieve a potential match for the movie title. We return the movie title, genre, year and plot to the customer.

If they confirm it's the right movie we then use {{Return available platforms [Production]}} to return a carousel of available streaming options. The carousel contains the platform, type (rental or buy), optional cost and a button that links to the service.

Once saved, Zendesk turns this procedure into a flow with all the required steps. And similar to our Agent Copilot procedures we can link to our API integrations within our instructions to tell the AI Agent to execute these actions.

That's it. No building. No blocks or steps or conditionals. And if we notice our AI Agent doesn't work exactly as expected, we can rewrite a part of our procedure and the generated flow will automatically adapt.
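The procedure only describes the carousel in words, and the AI Agent decides how to render it. To make "platform, type, optional cost and a button" concrete, here is a sketch that maps the cleaned options to generic card dictionaries. The card keys (`title`, `subtitle`, `image_url`, `buttons`), the type labels and `to_carousel_cards` are all hypothetical; this is not Zendesk's carousel schema.

```python
# Hypothetical, human-friendly labels for the option types.
TYPE_LABELS = {
    "subscription": "Included with subscription",
    "rent": "Rent",
    "buy": "Buy",
}


def to_carousel_cards(options: list) -> list:
    """Map cleaned streaming options to generic carousel cards (hypothetical shape)."""
    cards = []
    for option in options:
        label = TYPE_LABELS.get(option["type"], str(option["type"]).title())
        # Only mention a price when there is one (subscriptions have none).
        subtitle = f"{label}, {option['price']}" if option["price"] else label
        cards.append({
            "title": option["platform"],
            "subtitle": subtitle,
            "image_url": option["image"],
            "buttons": [{"text": f"Watch on {option['platform']}", "url": option["link"]}],
        })
    return cards
```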

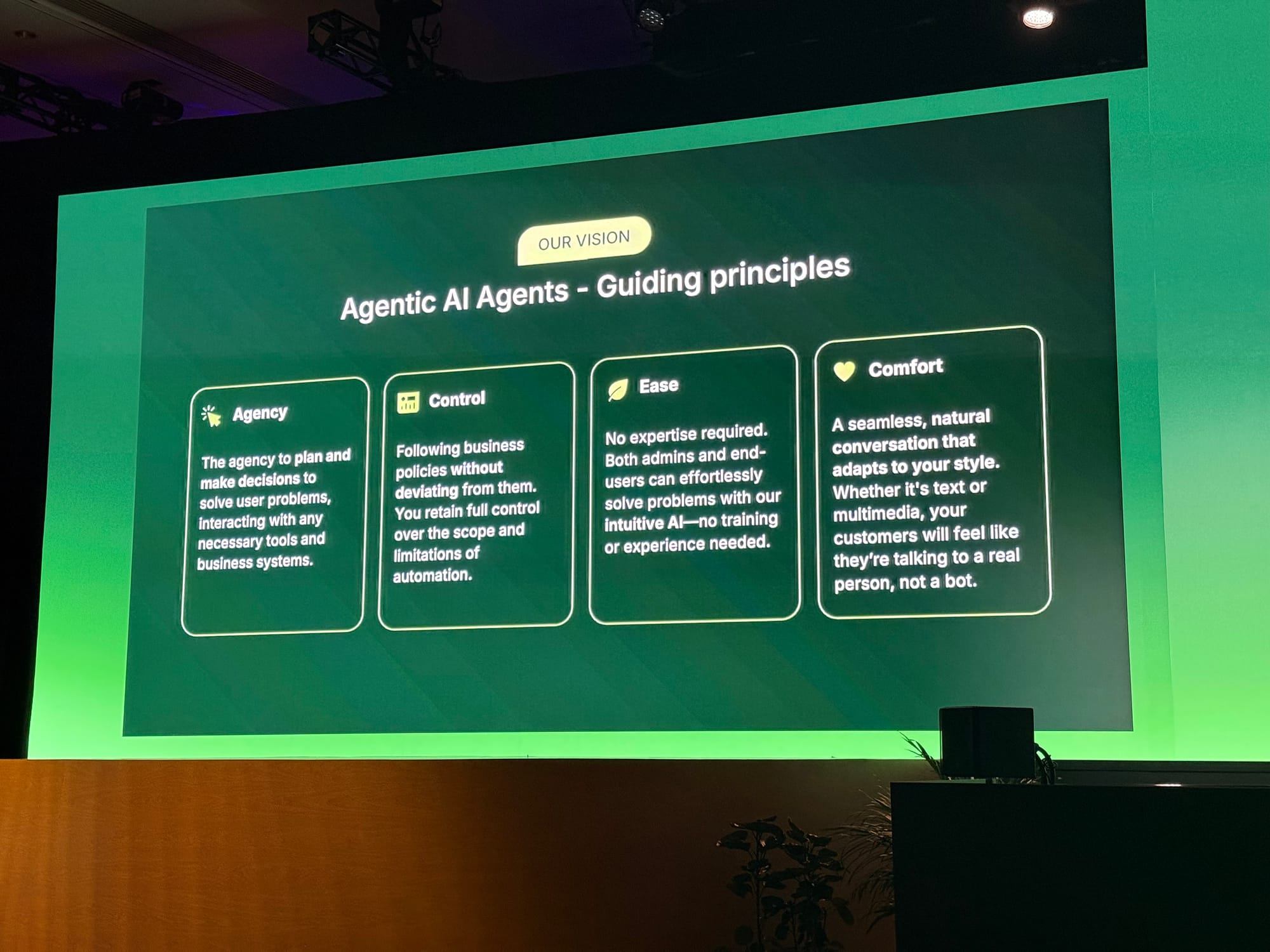

When looking at the generated flow, you might expect the AI Agent to follow it strictly, like the classic dialog builder flows. However, the generated flow just describes our AI Agent's reasoning; the agent can move gracefully across this logic. If a customer provides a lot of information up front, the AI Agent can skip the collection steps. Similarly, if a customer changes their mind halfway through the flow (e.g. they want another movie), the AI Agent will go back a few steps and resume the procedure at a logical point.
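Zendesk doesn't document how the agent moves through the generated flow, so the following is only a mental model: treat the procedure as a set of goals and, on every turn, pick the first goal that isn't met yet. The `extracted` dict stands in for whatever the LLM understood from the customer's message; `StreamingState`, `update` and `next_step` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StreamingState:
    title: Optional[str] = None
    country: Optional[str] = None
    confirmed: bool = False


def update(state: StreamingState, extracted: dict) -> StreamingState:
    """Merge what the customer just said; a new title invalidates the old confirmation."""
    if "title" in extracted and extracted["title"] != state.title:
        state.title, state.confirmed = extracted["title"], False  # go back a few steps
    if "country" in extracted:
        state.country = extracted["country"]
    if extracted.get("confirm"):
        state.confirmed = True
    return state


def next_step(state: StreamingState) -> str:
    """Pick the first unmet goal instead of walking a fixed sequence of blocks."""
    if state.title is None:
        return "ask_title"
    if state.country is None:
        return "ask_country"
    if not state.confirmed:
        return "retrieve_and_confirm_movie"
    return "return_streaming_options"
```

A customer who opens with both a title and a country skips straight past the two collection steps, and naming a new movie later only resets the confirmation, not the country.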

This reasoning and freedom (or agency) makes the conversation feel a lot more natural for the customer, and makes our AI Agents a lot more powerful.

Customer Experience

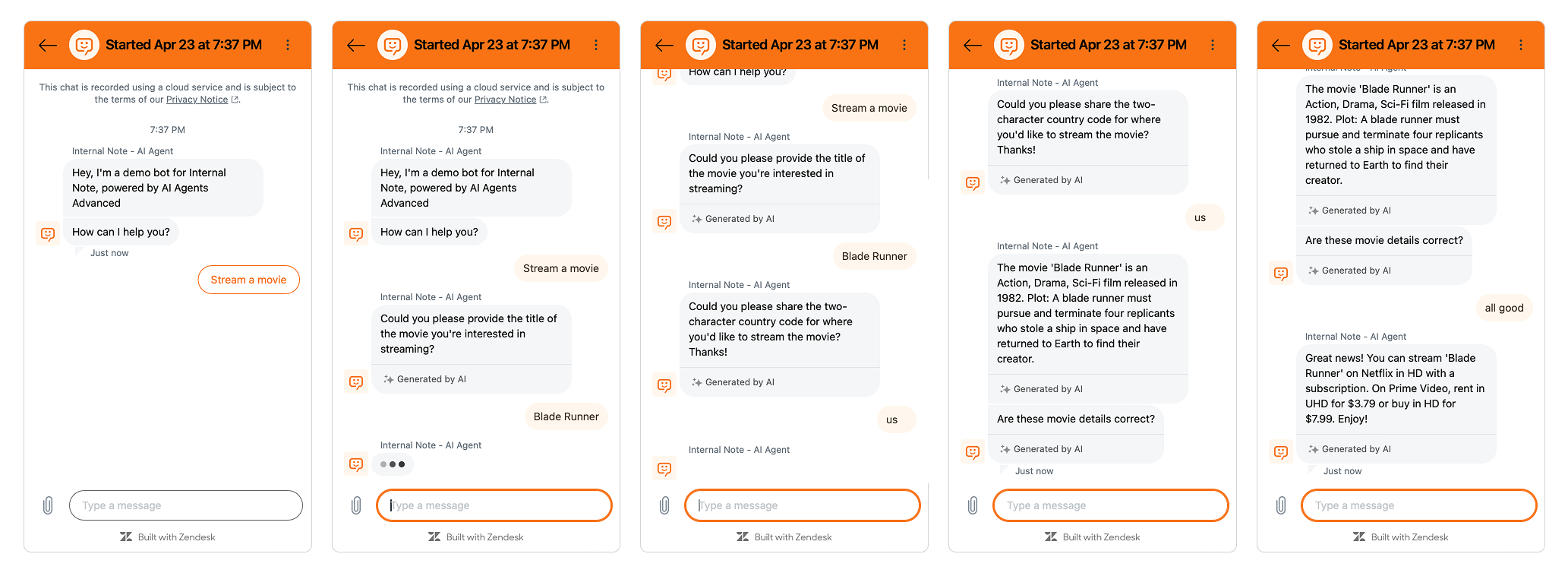

Now, let's see how this works for real. We start our conversation by asking

I'd like to stream a movie

The AI Agent picks up the use case, and asks for a title.

We then show the customer information about their chosen movie, and if they confirm it's the right one, we look up and return a list of streaming options for them.

Now, what would happen if our customer starts the flow with asking for streaming info for a specific movie? Or what if they change their mind in the middle of the flow?

As expected, our AI Agent gracefully handles these scenarios and either picks up the title immediately, or adapts to the new movie title and uses that one instead without fully restarting the flow. Nice.

Shortcomings

You might notice that the results in the last step are rendered differently in the two examples. This is a result of the agentic nature of our AI Agent. With the new procedures, our AI Agent gets a certain amount of liberty and freedom. It's that freedom that makes it adaptable to different scenarios, and allows it to jump around in a procedure to give the customer the best experience.

But where agency means freedom, we can also add controls that give our AI Agent boundaries and rules it can never break. These controls can either be refinements in our procedure (e.g. always add links to the list of streaming options) or Instructions we add to our AI Agent.

Instructions live across all use cases and can define things like:

- never tell a customer something is free, please use included instead.

- where possible, always use movie, and never use flick.

- never offer a customer streaming options for The Phantom Menace. There's better Star Wars movies! 🤪
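In the AI Agent, these are natural-language instructions the model takes into account while it writes a reply. As a rough analogy only, here is what the same three rules look like as a hard-coded post-processing filter; `apply_instructions` is a hypothetical helper, not how Zendesk enforces Instructions.

```python
import re


def _match_case(original: str, replacement: str) -> str:
    """Keep a capital letter if the replaced word started with one."""
    return replacement.capitalize() if original[0].isupper() else replacement


def apply_instructions(reply: str) -> str:
    """Hard-coded stand-in for the three example instructions above."""
    # Rule 3: no streaming options for The Phantom Menace.
    if re.search(r"phantom\s+menace", reply, flags=re.IGNORECASE):
        return "Sorry, I can't help with streaming options for that movie."
    # Rule 1: never say "free", say "included".
    reply = re.sub(r"\bfree\b", lambda m: _match_case(m.group(), "included"),
                   reply, flags=re.IGNORECASE)
    # Rule 2: "movie", never "flick" (a plural "s" is kept).
    reply = re.sub(r"\bflick(s?)\b",
                   lambda m: _match_case(m.group(), "movie") + m.group(1),
                   reply, flags=re.IGNORECASE)
    return reply
```

The brittleness is the point: a substitution like this turns "free trial" into "included trial", whereas an Instruction lets the model rephrase the whole sentence.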

At Relate Zendesk also announced more fine-grained controls for AI Agents, but I haven't been able to play around with these new capabilities yet.

Conclusion

So, there you have it. An example procedure of Zendesk's new Agentic AI Agents with adaptive reasoning capabilities. It combines use cases, procedures and external API calls into dynamic conversations with your customers that adapt to their specific questions while following your instructions.

It removes the need for manually building flows, and lowers the deployment time of your AI Agents significantly. Building the entire example in this article took me just one evening!

Similar to Agent Copilot, it's important to note that here too, your processes are key. If you know how you handle a refund, where you can retrieve order information, what customers are allowed to do via self-service, and where escalation is needed, setting up these new AI Agents is a breeze.

However, if you don't know your processes, I strongly advise you to first write down HOW you want to work, before you start building. It'll make this process a lot easier.