AI Summit - the new Zendesk Voice

In this last article in my AI Summit series I deep-dive into the new Zendesk Voice with its new AI Agent capabilities, Agent Copilot for Voice and Voice QA.

Welcome to this fourth, and final, installment in my AI Summit series, discussing all the new announcements for the Zendesk platform. If you missed the previous issues, you can find them here.

When you look at the Zendesk Suite overall there are plenty of elements that have gotten a lot of attention these last few years. Messaging, Bots and Agent Workspace all got major new features and designs. These changes impact the way Zendesk works in major ways:

The move to messaging turned direct web chat into an asynchronous channel that offers better self service solutions via bots and generative replies. It expanded the traditional chat channel to web, mobile and social channels and makes sure your customers can reach you from anywhere.

Similarly, the new Agent Workspace with its omnichannel routing, agent home and context panels gives agents a rich environment to handle tickets with context, automations and suggested replies to make ticket handling faster than ever before.

Even Zendesk Guide received some love with a new article editor, generative replies, tone shift and simplify AI features and – soon – support for Custom Objects, cross-brand articles and many more updates.

If you know your Zendesk Suite, you know where this is leading. One piece of Zendesk has always felt like the one piece of Duplo in a box full of Lego. And that’s Zendesk Talk. It’s Zendesk’s built-in voice offering that allows you to receive calls within Zendesk and route calls via a traditional “press 1” IVR to the right group, and it gives you some basic call recording and dashboard features.

But, compared to powerful third party voice solutions like Babelforce, Aircall and others, Zendesk Talk felt like Duplo to me. Yes, you can build cool buildings with it, but if you want details, more colors and cooler constructions, you should move to Lego. The same goes for Talk. It works. But it’s crude compared to more modern offerings.

Introducing, the new Zendesk Voice

The relaunch of native voice with end-to-end AI across the entire call journey

At the AI Summit Zendesk mentioned the new Zendesk Voice features spread across the AI Agent, Agent Copilot and QA sections of the talk. Combining all new features together, it’s a complete upgrade of the Talk we know, with powerful new additions that will, later this year, bring it into this AI powered age of CX.

The product announcements spread across 5 elements:

- Native voice upgrades

- AI agents for voice, powered by PolyAI (Q4-24)

- Copilot for voice (Q1-25)

- Post-call transcripts & summaries

- Voice QA for native voice + partners

Coincidentally this aligns nicely with the existing solutions available for text based channels like Messaging and email. Each part of the call journey gets improved, and where possible empowered with AI.

Before we dive in, first some context. Even though conversational interactions and chatbots are all the hype these days, most existing customer interactions still happen over – so called – traditional channels like email and voice. So when you think about automating not only your frontline but also assisting agents, improving automation and deflection rates for voice calls is an important factor.

Incoming Calls - AI agents for Voice

If we go over the announcements in the order of a normal call journey we need to start with how an incoming call is handled.

Traditionally, for voice channels, this means a prerecorded message welcoming the customer, an announcement that the call may be recorded, and then an IVR asking the customer to press a series of buttons to reach the right team.

IVRs, similar to traditional flow based chatbots, are not that great. A customer makes a guess at each step of the route, hoping to end up with the right team, while fearing they will get disconnected or reach a dead-end with an automated “resolution”.

Similar to how these flows were improved (or fixed) with new automation flows powered by intents and generated replies for messaging, the new AI agents for Voice will remove the need for static IVRs and turn conversations into dynamic and automated flows.

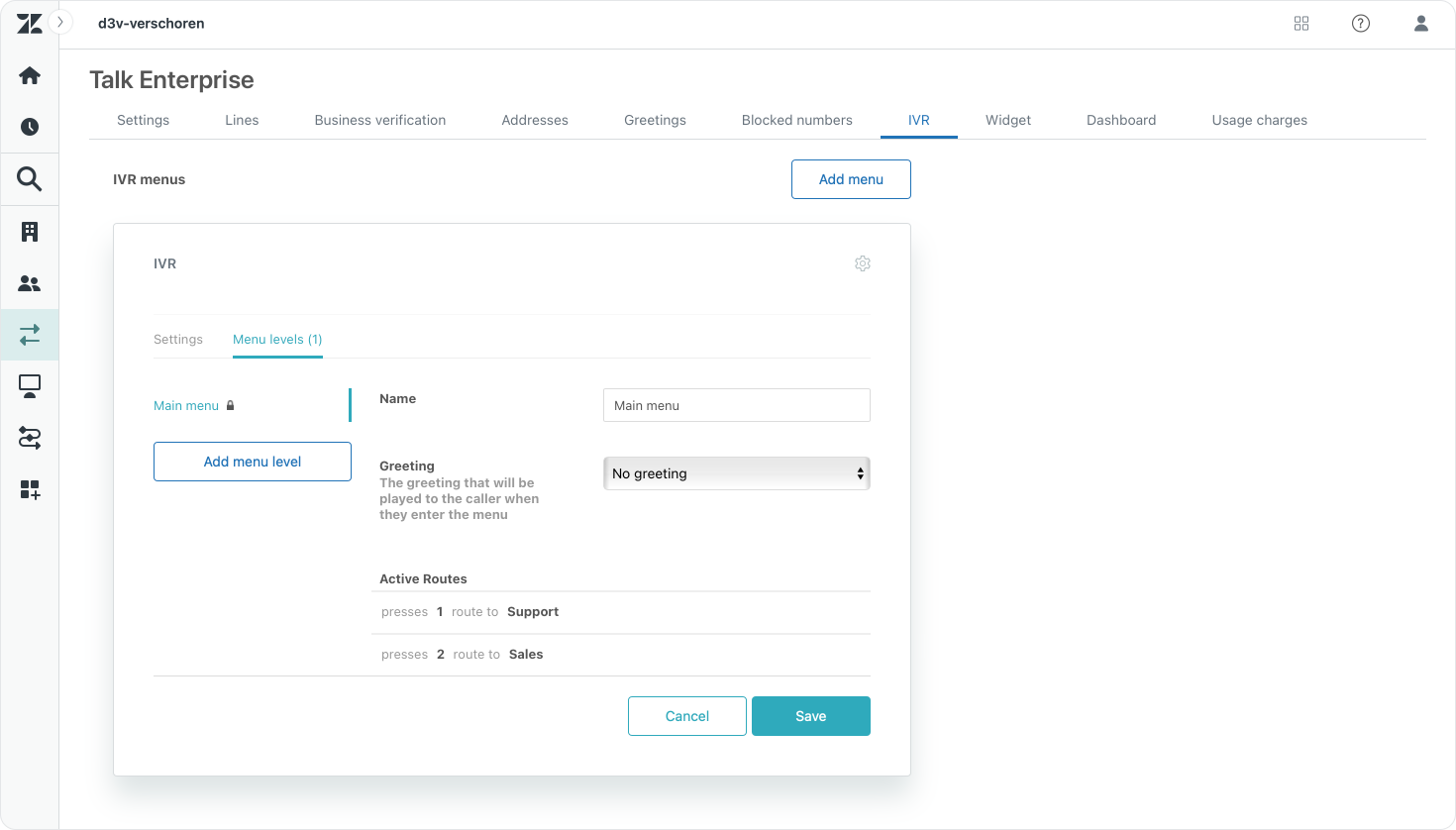

When a customer calls your number they’ll be greeted with an automated response that asks them why they’re calling. The AI Agent then processes the response and can do a couple of things to help the customer or agent.

For all inquiries the system can draw on indexed Help Center articles, websites and other product information to generate a spoken response to the customer. For more complex inquiries like an order status, the AI Agent can ask the customer for an order number and then request the information from your order management platform over an API. Similarly, when a customer wishes to book a table or change a reservation, the AI Agent can ask for the required date, group size and preferences and execute the booking directly within the call.
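To make the order status scenario a bit more concrete, here is a minimal sketch of what such a flow could look like. None of this is the actual PolyAI or Zendesk API, which hasn't been published in detail yet. The function names, the in-memory `FAKE_ORDERS` table standing in for an order management platform, and the shape of the returned dictionary are all assumptions made up for illustration.

```python
import re
from typing import Callable, Optional

# Hypothetical stand-in for an order management platform. A real setup
# would make an authenticated HTTP request to your own backend instead.
FAKE_ORDERS = {"10482": "shipped", "10977": "processing"}


def lookup_order_status(order_number: str) -> Optional[str]:
    """Return the order status, or None when the order is unknown."""
    return FAKE_ORDERS.get(order_number)


def extract_order_number(utterance: str) -> Optional[str]:
    """Pull the first run of four or more digits out of a transcribed utterance."""
    match = re.search(r"\d{4,}", utterance)
    return match.group(0) if match else None


def handle_order_inquiry(
    utterance: str,
    lookup: Callable[[str], Optional[str]] = lookup_order_status,
) -> dict:
    """Decide what the voice agent says next and whether to escalate."""
    order_number = extract_order_number(utterance)
    if order_number is None:
        # Nothing usable yet: ask the follow-up question.
        return {
            "say": "Could you tell me your order number?",
            "escalate": False,
            "context": {},
        }

    status = lookup(order_number)
    if status is None:
        # Unknown order: hand over to a human, keeping what was collected.
        return {
            "say": "Let me connect you with a colleague.",
            "escalate": True,
            "context": {"order_number": order_number},
        }

    return {
        "say": f"Order {order_number} is currently {status}.",
        "escalate": False,
        "context": {"order_number": order_number},
    }
```

Calling `handle_order_inquiry("Hi, where is my order 10482?")` resolves the question without an agent, while an unknown order number escalates with the collected order number attached as context.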

And for scenarios where we do need your actual human agents to take over, we can use this AI Agent the same way we can for messaging conversations: we can ask the right questions up front so the agent immediately has the customer id, order number or product type at hand when the call gets transferred.

So far this all seems like a great solution for a problematic channel. Voice, as opposed to email and messaging, is a real 1:1 channel. Where an agent might be able to handle 2-3 messaging conversations at once, and can handle dozens of email threads due to the truly asynchronous behavior of those channels, a voice call is live. You’re talking to the agent now and they can’t just put you on hold to talk to another customer at the same time.

So the only way to lower waiting times for customers is to either lower the number of calls coming in, or reduce the duration of each call, allowing an agent to handle more customers in a shift.

With AI-powered voice assistants, over 50% of inbound calls are resolved autonomously, significantly reducing the load on human agents.

Both scenarios can be handled by those new AI agents for Voice. By deflecting conversations with self-service solutions like generated responses or flows that integrate with backend systems and automate processes, you can lower the number of calls that require an escalation to an agent. And by collecting context, you can make sure the agent can dive right in, instead of asking first for the what, who, why. This additional context is stored in the new custom entities for Zendesk Intelligent triage.

Combining this with a real omnichannel strategy, where you also try to push customers to other channels like messaging or email, gets you to a point where your voice channel can really shine for those complex use cases where it’s often needed.
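The effect of those two levers is simple arithmetic, and a small sketch makes it tangible. The numbers below (600 calls, 6 minutes per call, a 50% deflection rate, 4.5 minutes with context collected up front) are made-up illustration values and not Zendesk or PolyAI benchmarks. The function name is hypothetical too.

```python
def agent_hours_needed(calls: int, avg_minutes: float, deflection_rate: float = 0.0) -> float:
    """Hours of live agent time needed for a batch of inbound calls.

    deflection_rate is the share of calls (0.0 to 1.0) that never
    reaches a human because the AI agent resolved them.
    """
    if not 0.0 <= deflection_rate <= 1.0:
        raise ValueError("deflection_rate must be between 0.0 and 1.0")
    escalated_calls = calls * (1.0 - deflection_rate)
    return escalated_calls * avg_minutes / 60.0


# Illustrative values only.
baseline = agent_hours_needed(600, 6.0)                        # no automation
fewer_calls = agent_hours_needed(600, 6.0, deflection_rate=0.5)  # half the calls deflected
shorter_calls = agent_hours_needed(600, 4.5)                   # context collected up front
both = agent_hours_needed(600, 4.5, deflection_rate=0.5)       # both levers combined

print(baseline, fewer_calls, shorter_calls, both)  # 60.0 30.0 45.0 22.5
```

Under these assumptions, combining deflection with shorter calls cuts the live agent time from 60 hours to 22.5 hours for the same call volume.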

PolyAI

Personally I was surprised when I heard Zendesk had built a new Voice automation solution. All their purchases in the last few years were focused on intent models (Cleverly.ai) or ticket automation (Ultimate.ai), and the fact that the Zendesk Talk stack is largely based on Twilio behind the scenes doesn’t make it prime for in-house AI developments on voice. Or at least, not to my limited knowledge of how the Zendesk product teams operate.

So, when they announced at the AI Summit that this new AI Agent for Voice was to be powered by PolyAI, a leading voice automation platform, it kinda made the above logical again. Zendesk invested in PolyAI earlier this year, so a deeper partnership between the two companies makes sense. Zendesk gets a powerful new partnership that turns their voice offering up to eleven, and PolyAI gets access to a big established user base that is used to the concept of AI Agents and already pays for a voice channel as part of the Zendesk Suite offering.

This does turn AI agents for Voice into another add-on though, similar to how the Advanced AI add-on adds more capabilities to Agent Workspace, and Ultimate replaces the native AI Agent solution with a more powerful solution.

The only thing that’s not really clear right now is how this AI agent for Voice will be offered. Will this be a product on Zendesk paper, a Voice Bot add-on, or will this be a tight integration with PolyAI, to be billed separately? Time will tell.

Escalation - Intelligent Routing

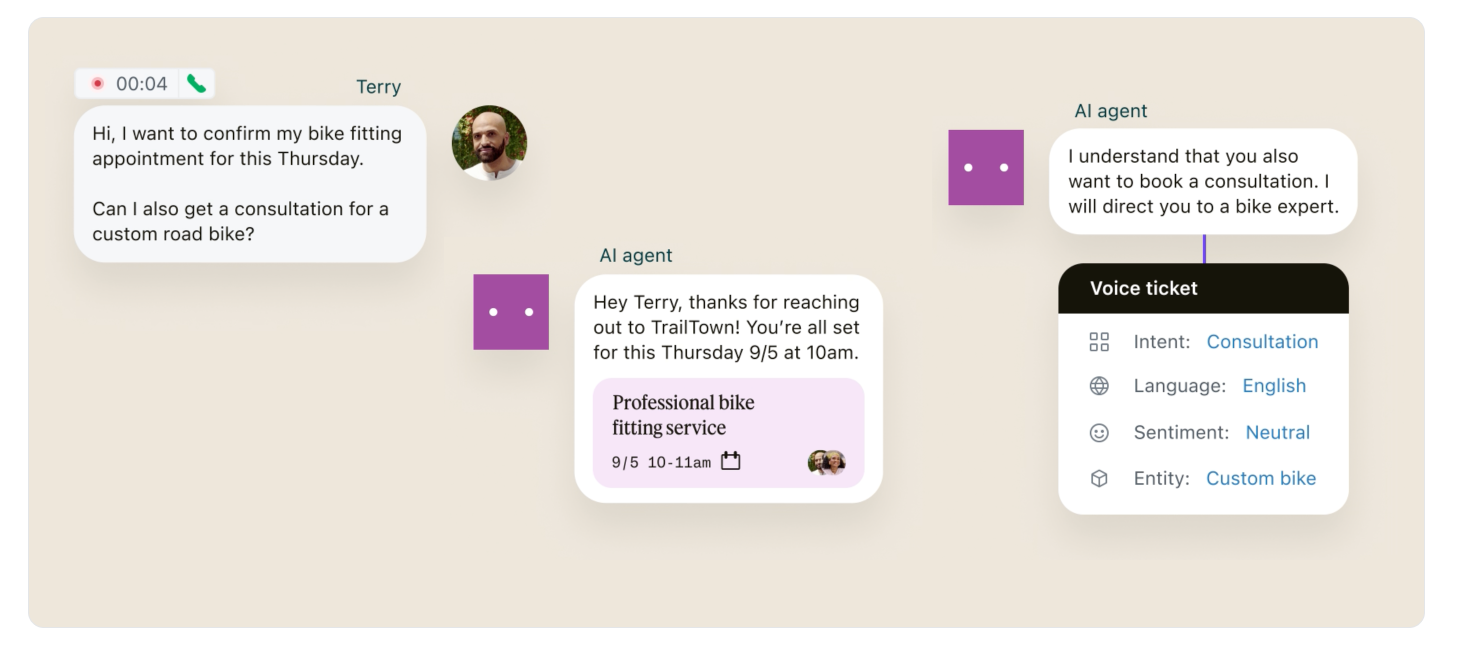

When the AI Agent can’t resolve the incoming call itself, it’ll need to escalate the call to an agent.

With Zendesk Talk this means setting up routing to a specific group of agents within Zendesk based on the number called, or a specific IVR choice. Calls are put in a queue and the first available agent in a group (or the first one that picks up the call) handles the conversation.

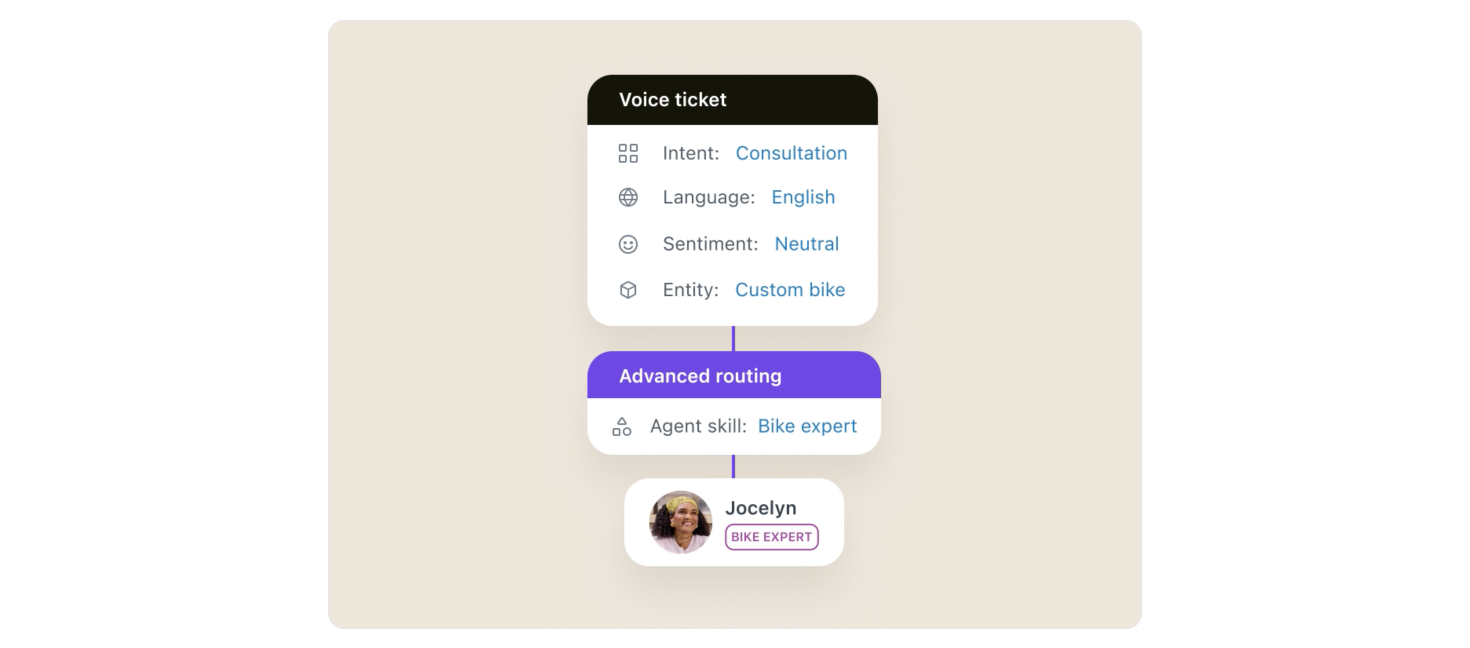

But similar to how messaging and email are moving from group-assignment towards agent-routing with assignment based on skills and availability, the new Zendesk Voice will do the same. While the AI Agent is handling the conversation, it’ll also log key elements like intent, sentiment and language, and this information is used to route a call to the right agent when an escalation is needed. This assignment will be based on the same skills and availability settings that are now available for other channels like messaging.

As for how this routing works, from what I was able to piece together from Zendesk documentation, basic omnichannel routing will be possible based on tags in the IVR (and thus skills).

Deeper routing based on intents and sentiment can be achieved by using Advanced AI, or you can leverage AI Agent for voice and inherit the intent from its initial conversation with the customer.
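As a rough mental model of what skills-based routing for calls could look like, here is a small sketch. It is not how Zendesk's omnichannel routing is actually implemented. The `Agent` and `Call` classes, the `intent:` and `lang:` skill naming, and the "first matching agent wins" rule are all assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Agent:
    name: str
    skills: Set[str] = field(default_factory=set)
    available: bool = True
    on_call: bool = False  # a voice call is live, so one call per agent


@dataclass
class Call:
    intent: str
    language: str
    sentiment: str = "neutral"  # logged for context, not used for matching here


def required_skills(call: Call) -> Set[str]:
    """Translate what the AI agent logged into the skills an agent needs."""
    return {f"intent:{call.intent}", f"lang:{call.language}"}


def route_call(call: Call, agents: List[Agent]) -> Optional[Agent]:
    """Return the first free agent who has every required skill, else None."""
    needed = required_skills(call)
    for agent in agents:
        if agent.available and not agent.on_call and needed <= agent.skills:
            return agent
    return None  # nobody matches: the call stays in the queue


team = [
    Agent("Ana", {"intent:billing", "lang:en"}),
    Agent("Ben", {"intent:returns", "lang:en", "lang:nl"}),
    Agent("Cas", {"intent:returns", "lang:nl"}, available=False),
]

match = route_call(Call(intent="returns", language="nl"), team)
print(match.name if match else "queued")  # Ben
```

A real router would also weigh things like priority, queue time and sentiment. The point of the sketch is only that intent and language captured by the AI agent can be matched against agent skills and availability instead of a fixed group.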

There are lots of moving pieces in this, so you can be sure, once I get access to everything, I'll do a big Omnichannel routing for Voice update!

During the Call

Sentiment & Intent and summarization

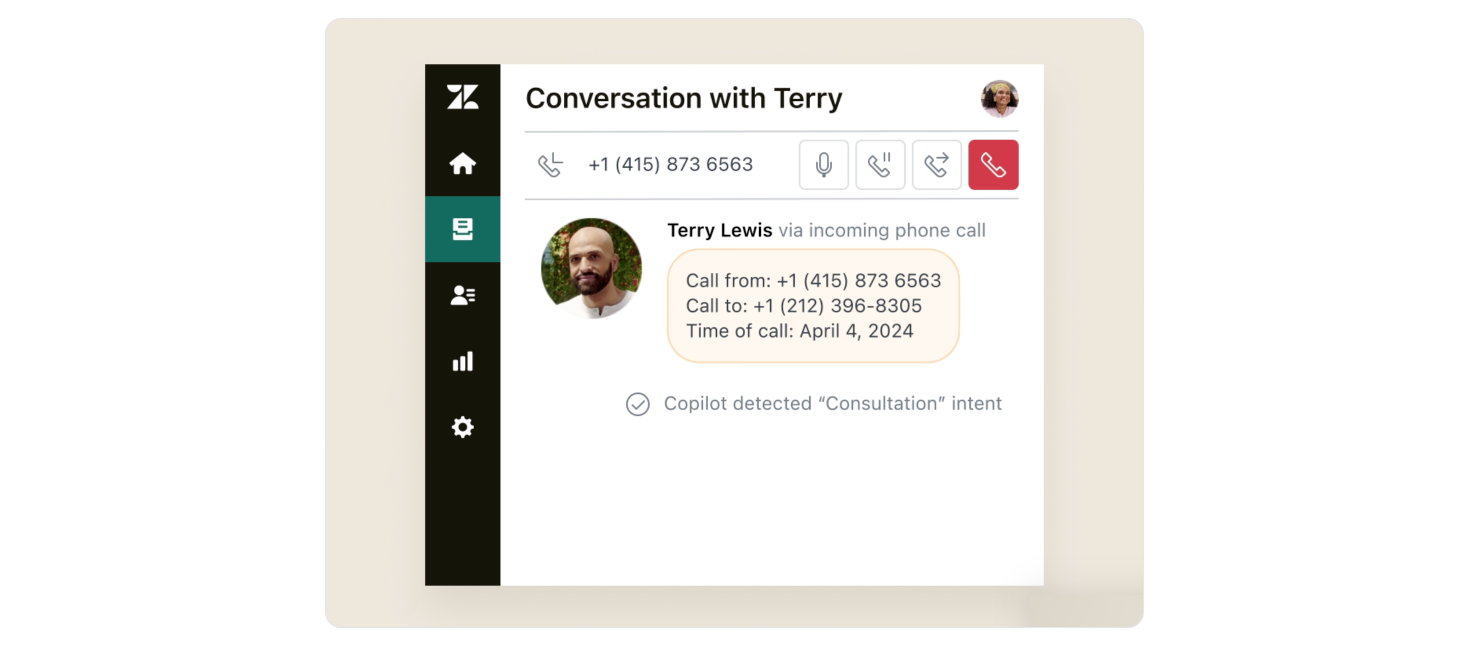

During the call Agents will have the same context available as they would have for all other Zendesk channels. The information gathered for routing (Sentiment, Intent, Language) is displayed in Agent Workspace, and the knowledge panel will provide relevant answers based on the content of the conversation.

Agents will also see a summary of the conversations routed through the AI Agent so they can quickly get up to speed with the customer’s needs, without needing to read through a transcript of the entire conversation.

These features are currently listed as coming soon, so we can expect to see them later this year, or even early next year.

Copilot for voice

During a call things might change. A customer might call asking about a product feature, and might end up asking for an exchange since their product does not have that feature and they’d rather have purchased another product. Or customers inquire about an existing reservation and end up upgrading to VIP tickets.

Whatever the scenario, during a call the context and intent of the conversation will evolve continuously, and agents might need to dive into multiple processes.

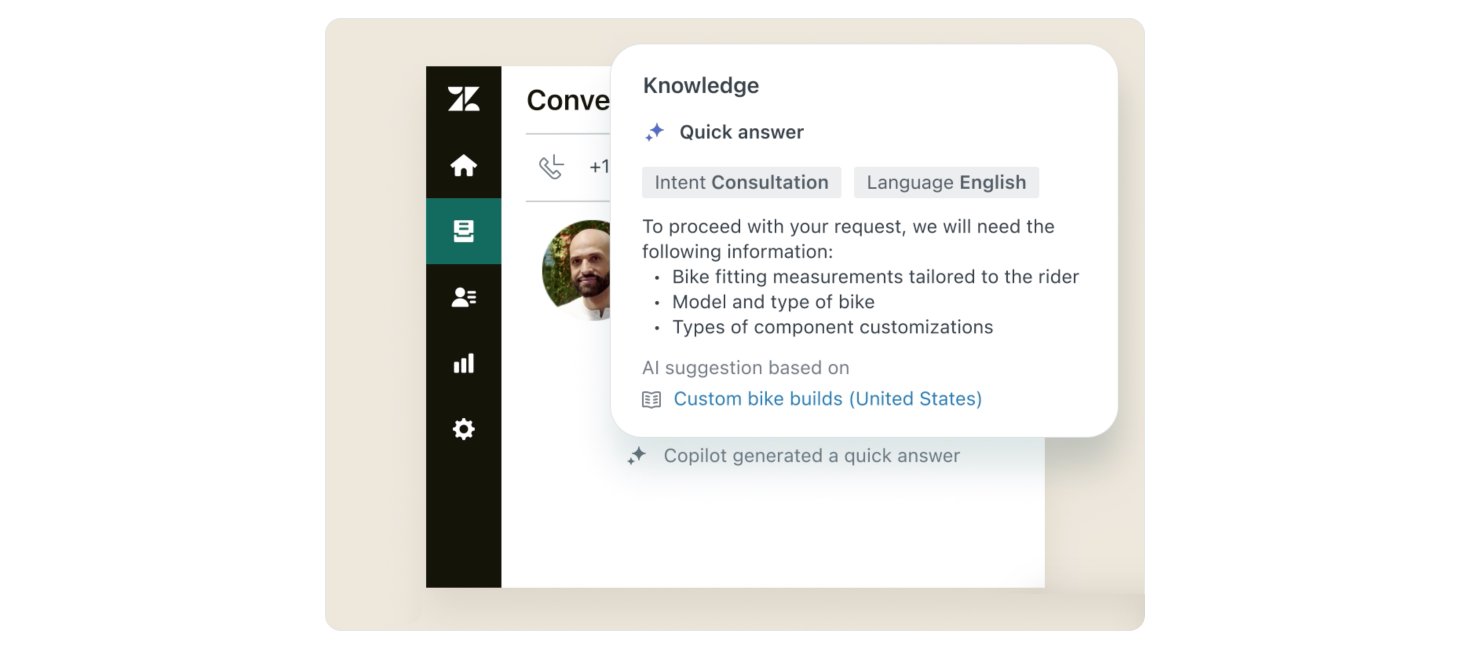

This is where the new Agent Copilot for Voice comes in. Announced for early next year, it will continuously provide agents with new suggested actions on the ticket by listening for new intents, and then offering quick replies in the Knowledge Panel which agents can use to reply to customers.

There’s no word yet on other auto-assist Agent Copilot features as we have for messaging and email, but just the fact that it surfaces relevant articles and content for agents will make handling calls a lot easier.

Post Call - Transcription & Summarization

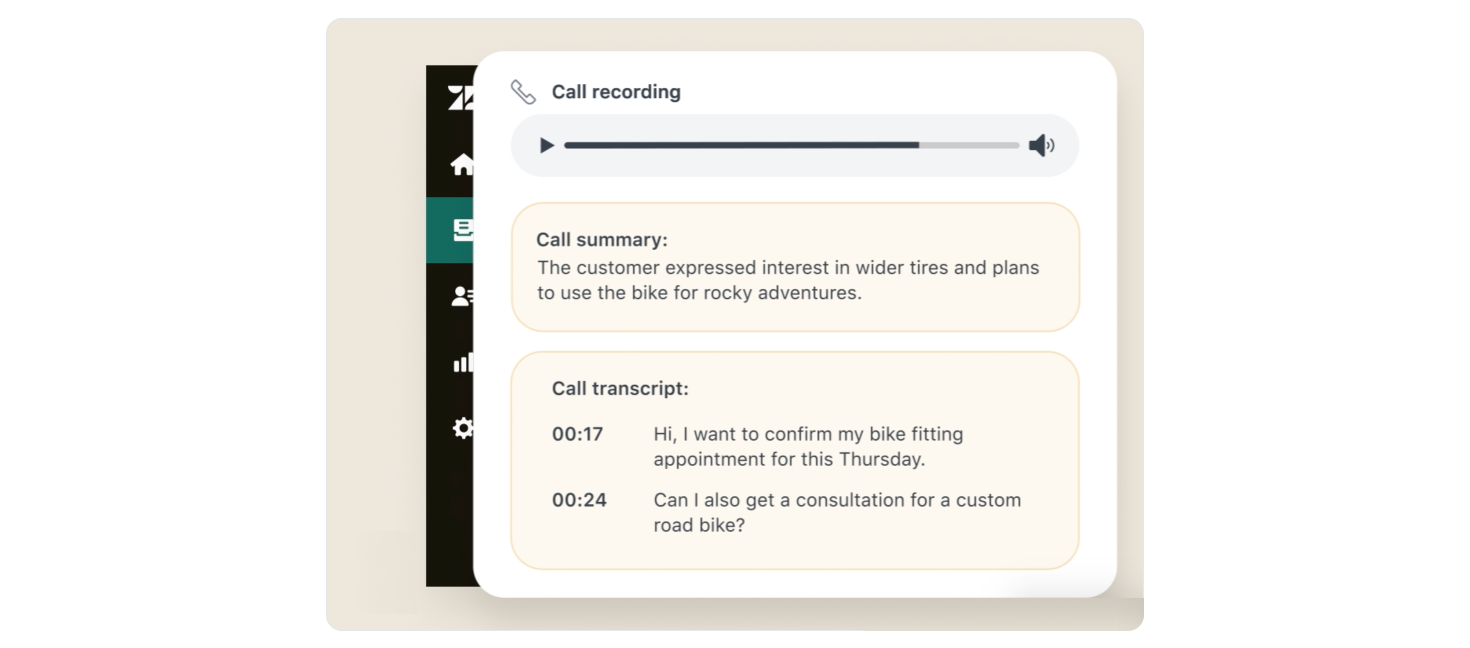

Once the call has concluded, AI generates a full transcript and a brief summary of the conversation and adds them to the ticket. This feature was already launched last year in EAP as Generative AI for Zendesk Voice and fits nicely in Zendesk's new Voice lineup.

In my preview of the Generative AI for Voice upon its release last year I noted the following:

(…) That being said, one major feature is currently missing for me, and that is the lack of integration with the other Zendesk AI and Intelligent triage features.

If you have a summary of the call, why doesn't this also feed the Summary option in the Intelligence Panel? And why can't we see the Intent or Sentiment of the call? All the data is obviously there. And if I copy the conversation transcript into a new ticket, I get the results on the right.

But, taking into account that feature is in an EAP, I'm sure this will all be nicely integrated once the feature work wraps up and the internal data gets hooked up.

Hindsight is 20/20 and it’s clear I was only partially right in my remarks. Adding a summary to the context panel was a bad idea. A phone call is only part of the ticket life cycle and multiple calls can be part of one ticket. So having a summary for each call as a note on the ticket is clearly the right way to go.

But it’s nice to see that the other feature requests I had, namely intent and routing, are now part of the Zendesk Voice offering.

Quality Control - Quality Assurance

From incoming call to escalation to handling the call and wrapping it up, we finally get to the last part of the call journey: Quality Control.

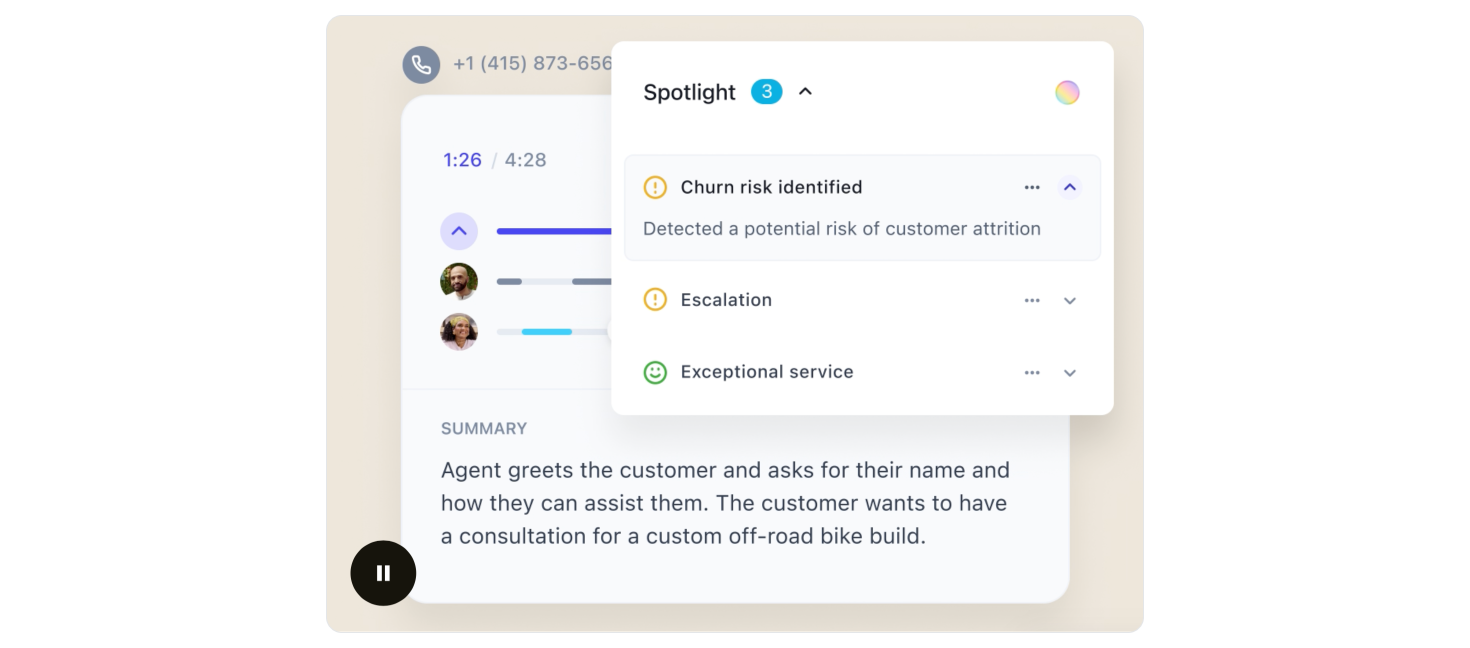

Announced and released in August this year, Zendesk QA for Voice is a new part of Zendesk’s new QA offering. Zendesk QA runs an automatic review over all your tickets and scores them on questions like: do agents understand the customer, are they friendly and do they have the right tone of voice, are they working towards a solution, ...

Similarly it can spotlight potential risks like churn, bad CSAT, tickets that require repeated escalations, ...

With the new Voice QA Zendesk expands this capability from traditional text-based channels to voice and uses conversation transcripts to audit your tickets.

Wrap up

So, that’s the new Zendesk Voice. A complete rework of Zendesk Talk featuring AI agents to automate incoming calls, integration with omnichannel routing to make sure the right agent picks up the phone, insights via summaries and intent detection, Copilot to assist agents during the call, and Voice QA that uses transcriptions and summaries to provide actionable insights after the call wraps up.

An AI Agent. An Agent Copilot. Zendesk QA.

An AI Agent.

Copilot

Zendesk QA.

Are you getting it? This is not a single product. These are three separate products. And they’re calling it Zendesk Voice.

All kidding aside, getting access to all the new features does require some work.

- You need Zendesk Suite to get access to a phone number and the overall voice capabilities in Zendesk.

- You need to buy Zendesk Advanced AI to get access to Agent Copilot with its quick replies, sentiment and intent analysis, and transcription and summary features.

- Zendesk QA brings you the new Voice QA features.

- And finally, you need to get PolyAI to get the new AI Agent for Voice.

Long gone are the days of just buying Zendesk Suite and getting everything, and it feels like we’re moving back to the days where Zendesk was a set of separate SKUs you could mix and match. The sole difference is that where previously you bought Zendesk to mix and match the channels you needed (talk, voice, help center, support,…), you now buy different add-ons depending on the kind of AI powered assist you need.

Do you want to deflect inquiries across channels and offer automated resolutions? You need to invest in AI Agents.

Do you want to assist agents and make their work more efficient? Look into Advanced AI to empower Agent Workspace. Want to improve the way your team works and get actionable insights? Ask for Zendesk QA.

I do like this new Zendesk Voice. Zendesk Talk was a bit long in the tooth and often overlooked by the Zendesk customers I work with, who reach out to third party providers to handle their phone calls. Now more of these customers might be able to handle their conversations without requiring a third party system, although I hope the incoming calls and escalation flows will also become available to those third party providers via the Zendesk Talk partner edition APIs.